Big bux hidden beneath wine-dark sea as we wait for the Tides to go out?

Wikimedia Endowment transparency – a year on, nothing seems to have changed

The Wikimedia Foundation has long promised that the Wikimedia Endowment – held by the Tides Foundation and managed as an opaque, organisationally completely separate entity by a board led by Jimmy Wales – would soon be transferred to a financially transparent 501(c)(3) organisation. These promises date back to 2017 (see Signpost coverage this year and last year).

In April 2021, Endowment Director Amy Parker and Director of Development Caitlin Virtue again said on Meta:

"We are in the process of transitioning the Endowment to a new US 501c3 charity, after which it will begin making grants and will publish its own Form 990. ... As we approach the $100 million funding milestone, we are in the process of establishing the Endowment as a separate 501c3. ..."

"We are in the process of establishing a new home for the endowment in a stand-alone 501(c)(3) public charity. We will move the endowment in its entirety to this new entity once the new charity receives its IRS 501(c)(3) determination letter."

A full year has now passed since that 501(c)(3) determination letter was received in June 2022, yet the money has still not been transferred. This means that another year will have passed without public reporting on the Endowment's revenue and expenses: the Endowment is organisationally separate from the Wikimedia Foundation (its revenue and assets are not included in WMF revenue and assets), and the Tides Foundation does not provide any such reporting either.

In response to an inquiry on the Wikimedia mailing list, WMF Chief Financial Officer Jaime Villagomez recently posted the following update on Meta:

Work is underway to move the Endowment assets out of Tides to its own charity. The transition is complex, due to the nature of banking activities and donor commitments so we cannot instantaneously move from one entity to the other. We anticipate that it will take a few more weeks to transfer most of our transactional and banking activity away from Tides. We will maintain the old endowment accounts to process residual income (such as dividend payments) for some months before we close those accounts. More importantly though, we will also be sharing an update on the Endowment's activities in FY 22-23 in the annual fundraising report to be published in the next quarter.

— User:JVillagomez (WMF)

In addition, WMF CEO Maryana Iskander and WMF board member Nataliia Tymkiv said on the Wikimedia mailing list:

The Board of Trustees will meet next on August 15 in Singapore. Following this meeting, there will also be an open session with the Wikimedia Foundation and Endowment Boards during Wikimania to answer questions on these topics or others you may have.

The Wikimedia Endowment holds a significant proportion of all the funds the public has ever donated to the Wikimedia cause. Yet it does not follow the same standards of transparency that apply to other parts of the movement. For example, it would be unimaginable for any WMF affiliate to ingest over $100 million over the best part of a decade without ever publishing audited accounts detailing revenue and expenses.

Why should the Wikimedia Endowment be different? – AK

Wikimania scholarships

The Wikimania Scholarship outcomes were recently published on the Wikimania site. According to the summary provided there,

We had a total of 3800 applications started, after removing spam and incomplete applications we had 1209 actual applications for review. These application were then grouped according to regions.

| Region | Applications | Approved |
|---|---|---|
| Africa, Sub-Saharan | 490 | 38 |
| Central and Eastern Europe | 78 | 17 |
| Central Asia | 55 | 8 |
| East, Southeast Asia, and the Pacific | 150 | 53 |
| Middle East and North Africa | 82 | 16 |
| North America | 51 | 12 |
| South America | 68 | 15 |
| Sub-continental Asia | 144 | 20 |
| Western Europe | 91 | 18 |
| Totals | 1209 | 197 |
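The totals row and the overall approval rate can be rechecked from the regional figures with a few lines of JavaScript. This is only an arithmetic sketch; the variable and function names are illustrative.

```javascript
// Regional figures copied from the scholarship table above.
const scholarships = [
  { region: "Africa, Sub-Saharan", applications: 490, approved: 38 },
  { region: "Central and Eastern Europe", applications: 78, approved: 17 },
  { region: "Central Asia", applications: 55, approved: 8 },
  { region: "East, Southeast Asia, and the Pacific", applications: 150, approved: 53 },
  { region: "Middle East and North Africa", applications: 82, approved: 16 },
  { region: "North America", applications: 51, approved: 12 },
  { region: "South America", applications: 68, approved: 15 },
  { region: "Sub-continental Asia", applications: 144, approved: 20 },
  { region: "Western Europe", applications: 91, approved: 18 },
];

// Sum both columns so the "Totals" row can be checked against the regional rows.
function totals(rows) {
  return rows.reduce(
    (acc, r) => ({
      applications: acc.applications + r.applications,
      approved: acc.approved + r.approved,
    }),
    { applications: 0, approved: 0 },
  );
}

// Approval rate (between 0 and 1) for each region.
function approvalRates(rows) {
  return rows.map((r) => ({ region: r.region, rate: r.approved / r.applications }));
}

const t = totals(scholarships);
const overallRate = t.approved / t.applications;
console.log(t); // { applications: 1209, approved: 197 }
console.log(`${(overallRate * 100).toFixed(1)}%`); // 16.3%
console.log(overallRate < 1 / 6); // true
```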

This means that less than one in six scholarship applications was approved. To one editor, at least, this seemed a "paltry" amount of funding:

I find it rather disgraceful that the Wikimedia Foundation accepted only 197 of the 1209 completed scholarship applications for this year's Wikimania conference, or 16%. While I recognize that travel scholarships aren't cheap, I presume that a sizable portion of the applicants are heavily involved in Wikimedia projects, devoting many hours a week to volunteer work. Wikimania scholarships are one of the few ways the WMF can use its ample financial resources to show tangible appreciation to volunteers and aid participation in the movement. You could have afforded to assist more than 16% of applicants, and it's disappointing that you deemed the expense not worthwhile when you put together your budget.

— User:Sdkb

A WMF spokesperson responded by saying:

The Foundation sponsors the whole event—not just the scholarships—and in this year's Annual Plan, despite reducing expenses across the Foundation, funding for Wikimania increased. Not to undermine any disappointment that any applicant may feel for not having been selected, of course that’s completely valid and understandable, but it does feel relevant to mention that this year there are ~200 scholarships, around 66% more than the ~120 from the last in-person Wikimania in 2019. Together with each year’s Core Organizing Team, the Foundation always thinks about how to spend the funds to reach the most Wikimedians possible, because we completely agree with you that recognizing people for their contributions is critical. This year, that meant increasing the number of scholarships that could be awarded by the volunteer subcommittee, working to keep virtual registration free despite the costs of the virtual event, and working to keep the in-person ticket subsidized. I know it's of course still disappointing for anyone who wanted to attend in person and didn’t get selected. I really do hope those people will consider applying again for future Wikimanias.

— User:ELappen (WMF)

User:Sdkb seemed unimpressed. – AK

Gitz6666 unglocked

In a rare reversal, User:Gitz6666 had his global lock overturned after lodging an appeal with the stewards. Gitz6666 had been indefinitely blocked on the Italian Wikipedia in May, along with another user, and then had his account globally locked by an Italian steward.

The underlying dispute concerned a sociologist's Italian Wikipedia biography that had attracted press attention for its alleged unfairness (see previous Signpost coverage). – AK

Brief notes

- New administrators: The Signpost welcomes the English Wikipedia's newest administrator, Firefangledfeathers.

- Articles for Improvement: This week's Article for Improvement (beginning 17 July) is Religious philosophy. It will be followed the week after by Act (drama). Please be bold in helping improve these articles!

Tentacles of Emirates plot attempt to ensnare Wikipedia

Agency's leaked emails provide rare glimpse into use of Wikipedia in a "smear campaign" financed by the ruler of the United Arab Emirates

An investigative article in The New Yorker, titled "The Dirty Secrets of a Smear Campaign", describes how "Sheikh Mohammed bin Zayed, the ruler of the United Arab Emirates, paid a Swiss private intelligence firm millions of dollars to taint perceived enemies". Most of the lengthy article (whose audio version runs 1 hour and 13 minutes) isn't about Wikipedia, but there are several paragraphs about how the firm ("Alp Services", founded by an investigator named Mario Brero) used it for their purposes alongside many other interesting tools (such as illegitimately obtaining phone call records or tax records of their targets, and planting stories in various news outlets).

The first part is about an American oil trader named Hazim Nada, founder of a company called Lord Energy:

On January 5, 2018, Sylvain Besson, a journalist who had written a book purporting to tie [Hazim Nada's father] Youssef Nada to a supposed Islamist conspiracy, published an article, in the Geneva newspaper Le Temps, claiming that Lord Energy was a cover for a Muslim Brotherhood cell. “The children of the historical leaders of the organization have recycled themselves in oil and gas,” Besson wrote. A new item in Africa Intelligence hinted darkly that Lord Energy employees had “been active in the political-religious sphere.” Headlines sprang up on Web sites, such as Medium, that had little editorial oversight: “Lord Energy: The Mysterious Company Linking Al-Qaeda and the Muslim Brotherhood”; “Compliance: Muslim Brotherhood Trading Company Lord Energy Linked to Crédit Suisse.” A Wikipedia entry for Lord Energy [probably fr:Lord Energy, created by a single-purpose account (SPA) in June 2018] suddenly included descriptions of alleged ties to terrorism.

This outside view from the victim's perspective is later matched to what the reporter learned from leaked/hacked internal emails of "Alp Services":

In February, 2018, [Brero] asked for more money to expand his operation against Nada, and proposed “to alert compliance databases and watchdogs, which are used by banks and multinationals, for example about Lord Energy’s real activities and links to terrorism.” His “objective,” he explained, was to block the company’s “bank accounts and business.” [...]

Alp quickly put the Emiratis’ money to work. An Alp employee named Raihane Hassaine e-mailed drafts of damning Wikipedia entries. On an invoice dated May 31, 2018, the company paid Nina May, a freelance writer in London, six hundred and twenty-five pounds for five online articles, published under pseudonyms and based on notes supplied by Alp, that attacked Lord Energy for links to terrorism and extremism. (Hassaine did not respond to requests for comment. May told me that she had worked for Alp in the past but had signed a nondisclosure agreement.)

And:

Alp operatives bragged to the Emiratis that they had successfully thwarted Nada’s efforts to correct the disparaging Lord Energy entry on Wikipedia. “We requested the assistance of friendly moderators who countered the repeated attacks,” Brero wrote in an “urgent update” to the Emiratis in June, 2018. “The objective remains to paralyze the company.” To pressure others to shun Lord Energy, Alp added dubious allegations about the company to the Wikipedia entries for Credit Suisse [presumably corresponding to this July 2019 edit by a SPA, subsequently removed in January 2021] and for an Algerian oil monopoly [possibly Sonatrach, referring to these edits, which were removed from the English Wikipedia after the publication of the New Yorker article].

And regarding another target:

Brero’s campaign sometimes involved secret retaliation. In a 2018 report, a U.N. panel of human-rights experts concluded that the U.A.E. may have committed war crimes in its military intervention in Yemen. The Emiratis commissioned Brero to investigate the panel’s members, especially its chairman, Kamel Jendoubi, a widely admired French Tunisian human-rights advocate. [...] “Today, in both Google French and Google English, the reputation of Kamel Jendoubi is excellent,” Brero noted in a November, 2018, pitch to the Emiratis. “On both first pages, there is not a single critical article.” Within six months, Brero promised, Jendoubi’s image could be “reshaped” with “negative elements.” The cost: a hundred and fifty thousand euros.

Rumors spread through Arab news outlets and European Web publications that Jendoubi was a tool of Qatar, a failed businessman, and tied to extremists. A French-language article posted on Medium suggested that he might be “an opportunist disguised as a human-rights hero.” An article in English asked, “Is UN-expert Kamel Jendoubi too close to Qatar?” Alp created or altered Wikipedia entries about Jendoubi, in various languages, by citing claims from unreliable, reactionary, or pro-government news outlets in Egypt and Tunisia.

Jendoubi told me that he’d been perplexed by the flurry of slander that followed the war-crimes report. “Wikipedia is a monster!” he told me. He had managed to clean up the French entry, but the English-language page still stymied him. He said, “You speak English—can you help?”

On fr:Kamel Jendoubi, a "Controverses" ("Controversies") section was added by a SPA in January 2019 and expanded by another SPA in August 2019. Most of it was deleted in April/May 2021, first by an account with only one earlier edit and then by an experienced editor. Around the same time, an IP editor attempted to remove similar information from the English Wikipedia's article Kamel Jendoubi, but the edit was reverted as "Likely censorship of content". A July 2022 edit that gave a more detailed rationale for a more limited removal, however, was successful.

The New Yorker article was published in April. Its findings were put into a much wider context earlier this month when various European news media collaborating in the European Investigative Collaborations (EIC) network reported on the results of an investigation dubbed "Abu Dhabi Secrets", revealing that

[...] Alp Services has been contracted by the UAE government to spy on citizens of 18 countries in Europe and beyond. Alp Services has sent to the UAE intelligence services the names of more than 1000 individuals and 400 organizations in 18 European countries, labelling them as part of the Muslim Brotherhood network in Europe.

This investigation was based on a stash of "78,000 confidential documents obtained by the French online newspaper Mediapart", according to Middle East Eye, which summarized the modus operandi of the campaign as follows:

Alp Services - and Brero - were paid tens of thousands of euros per individual targeted, according to Le Soir. The Swiss group then produced reports on the identified individuals.

[...]

Once the information was sent over to Emirati intelligence services, agents were able to target the individuals further through press campaigns, forums published about them, the creation of fake profiles and the modification of Wikipedia pages.

Many or most of the news reports emanating from the collective EIC investigation don't seem to have focused on the Wikipedia angle. Still, the Spanish publication Infolibre reveals some further details, quoting from messages in which Alp's paid Wikipedia editors report on their efforts to their Emirati clients, in particular edits (presumably including these) on the English and Spanish Wikipedias to connect Mohammed Zouaydi (known as "Al Qaeda's financier") to the Muslim Brotherhood. On the Spanish Wikipedia, they claim to have entered "an intense battle with pro-Muslim Brotherhood elements who wanted to censor information about the Brotherhood and its links to Al Qaeda" (translated back from Spanish).

At the French Wikipedia's "Projet Antipub", editors are currently looking into various other articles and accounts that may be connected to the campaign. – H

Ruwiki

The Telegraph reports (non-paywalled) on the founding of Ruwiki (see previous Signpost coverage):

Wikipedia's top editor in Russia has quit the online encyclopaedia to launch a rival service sympathetic to Vladimir Putin.

Vladimir Medeyko, the long-serving leader of Wikipedia editors in the country, has copied the website's existing 1.9 million Russian articles into a new Kremlin-approved version.

The creation of the new service, called Ruwiki, was announced by a State Duma deputy from Putin's political party.

It comes as the Russian leader steps up efforts to censor coverage of the war in Ukraine, amid growing signs of discontent at home. [...]

According to The Telegraph, Ruwiki omits some of the content found in the corresponding Wikipedia articles:

The Ruwiki entry for Ukraine makes no mention of Russia's invasion or the international support for Kyiv's resistance.

The service has also chosen not to replicate articles on Yevgeny Prigozhin’s attempted coup against Putin.

In related news, on July 5 between around 2 am and 4 am Moscow time, access to Wikipedia and other "Western internet services" including Google was temporarily disrupted as Russian authorities tested the country's "Sovereign Internet system", as reported in a Twitter thread by Access Now staff member Natalia Krapiva. – AK, H

In brief

- Wiki wars: A BBC radio programme presented by Lara Lewington tries to explain how the Wikipedia sausage is made, touching on hoaxes and Things Gone Wrong like the Croatian and Scots Wikipedias. The programme contains two gross errors – it claims that IP editors lost the ability to edit biographies of living people after the Seigenthaler incident (ahem) and that paid editors are forbidden from editing articles directly (they are only "very strongly discouraged" from doing so).

- TEDx talk: Annie Rauwerda (of Depths of Wikipedia) gave a TEDx talk titled "Why an encyclopedia is my favorite place on the Internet". Watch the recording to find out which encyclopedia it is.

- Jimmy Wales interview: The "co-founder of Wikipedia" was interviewed by Lex Fridman on his popular podcast (video, transcript), for three hours and 15 minutes. One user compiled some excerpts they found especially interesting from a Wikipedian perspective, and journalist Stephen Harrison (known for his Wikipedia column in Slate) summarized several points from the interview in a Twitter thread, e.g. about Wales "push[ing] back on Wikipedia’s alleged left wing bias."

David Thomsen (Dthomsen8) and Ingo Koll (Kipala)

David Thomsen (Dthomsen8)

David died on November 25, 2022, at the age of 83.[1] He was a prolific editor and a self-declared gnome who added to articles on Philadelphia, United States, and created many new articles.[2] David lived in Fairmount, Philadelphia. After earning a degree at Lafayette College and serving for two years with the US Army in West Germany, he worked for 27 years as a computer programmer for Sunoco.[2][3]

David began his post-retirement Wikipedia career on March 13, 2008; five years later, he had made 100,000 edits, mostly through wikignoming. As of this issue, he is the 52nd-most-active editor in Wikipedia. David was a member of the Guild of Copy Editors, and took part in its monthly drives and blitzes. He was also part of the Article Rescue Squadron, saving uncited-but-promising articles from deletion.[2] David was openly proud of his Wikipedia-editing activities, and he even designed his own Wikipedia-themed caps, which he wore to Philadelphia wiki-meetups and gave away to his fellow Wikipedians.[2] Outside Wikipedia, David was a member of the Historical Society of Pennsylvania.[3]

Dthomsen8 has left an indelible legacy at Wikipedia, and the encyclopedia and editing community are poorer for his loss. Messages of condolence can be left on his talk page.

Ingo Koll (Kipala)

Wikimedia Tanzania and Jenga Wikipedia ya Kiswahili have published a tribute to User:Kipala, a much-loved German Wikimedian who – as a fluent Swahili speaker – was particularly active in the African Wikimedia community (see also Wikimedia-l thread).

Ingo was an important stakeholder of Swahili/Kiswahili Wikipedia whose immeasurable contribution will not be forgotten. We will always remember our beloved Ingo Koll for many things, especially his significant contribution to editing, building, and maintaining Wikipedia Kiswahili, Wikimedia Tanzania and Jenga Wikipedia Kiswahili. He was a selfless person who gave his all whenever needed. His sudden death is a big blow to the Swahili/Kiswahili Wikipedia community and Wikimedia Tanzania.

He was a wonderful teacher who wanted to see Kiswahili grow and be increasingly used in education. He was committed to spreading free knowledge through Wikimedia projects and programs. His exemplary work is seen in radio programs such as “Macho Angani” and his remarkable production of Astronomy topics into Swahili language “Astronomia kwa Kiswahili”. Ingo loved everyone who fondly called him Babu Ingo or Kipala. We will honor him by keeping his values of hard work, selfless spirit, truthfulness and going all the way always. May he rest in eternal peace. Amen.

– AK

- ^ "David Thomsen Obituary 2022". Cremation Society of Philadelphia. November 25, 2022.

- ^ a b c d Schleifer, Theodore (September 2, 2013). "Philadelphian is a king of Wikipedia editors". The Philadelphia Inquirer. Archived from the original on June 30, 2023. Retrieved July 6, 2023.

- ^ a b Ha, Yoona; Wilson, Jacob (October 14, 2014). "On Philadelphia's birthday, a look at how it came alive on Wikipedia".

ABC for Fundraising: Advancing Banner Collaboration for fundraising campaigns

- Julia Brungs is the Lead Community Relations Specialist in the Wikimedia Foundation's Advancement department.

At Wikimedia, collaboration is a pillar of everything we do. The Foundation is committed to building more of it into our efforts to raise funds to support our mission. The collaboration process for the 2023 English fundraising campaign is kicking off now, right from the start of the fiscal year.

2022 English Campaign

In December 2022, the English Wikipedia community ran a Request for Comment that underscored the importance of the Foundation’s fundraising team working with volunteers on banner messaging. The team kicked off a collaboration process, and the resulting campaign featured more than 400 banners that came from co-creation with volunteers. The revenue performance of the banners declined significantly last year, which resulted in a longer campaign, with readers seeing more banners than in previous years. The fundraising team learned a lot through the collaboration process and is eager this year to build on this work with volunteers to develop content that will successfully invite donors to support our mission. We aim to reach fundraising targets in ways that minimize the number of banners shown, limit disruption, and resonate with readers and volunteers.

Community collaboration in 2023 fundraising

The fundraising team took the community collaboration model created in the 2022 English campaign and expanded it in 2023 to Sweden, Japan, Czechia, Brazil, and Mexico. In addition to on-wiki collaboration spaces, the team created spaces to increase transparency around the fundraising program and worked with volunteers from each community on local-language wikis, on virtual calls, and in other forums. Collaboration between communities and the Foundation early in the fundraising process is critical. Each community gave their time to discuss their own unique context and to provide input on topics ranging from how to offer a strong local payment experience to the best ways to translate Jimmy’s new message from 2022 for a local audience. Fundraising messaging in the 2023 campaigns focused primarily on the quality of translations and localization. Affiliates were often a key part of this process, providing localization expertise and bringing others to the table. The team is grateful to everyone who worked together with them on these campaigns, and wants to invite you to participate in the 2023 campaign.

What’s next?

Collaboration for the 2023 English banner campaign is starting now! The community collaboration page for the English campaign launched on Thursday (13th of July), before the start of the first pre-tests, to kick off collaboration right from the start of the year. The page provides information to increase understanding of the fundraising program, background on improvements around community collaborations that have been made since the last campaign, new spaces for collaboration, and gives messaging examples to invite volunteers to share ideas for how we can improve the campaign together in 2023.

Building on the campaigns that have run in the past six months, there will also be collaboration calls for community members to bring their ideas. Foundation staff will attend several in-person and virtual community events in the coming months to open up collaboration opportunities where volunteers are already gathering, and are happy to come to other volunteer venues as well.

We will continue to iterate and improve this process in fundraising campaigns going forward. Be part of the conversation and help shape the 2023 campaign.

Background on annual planning

This change in approach to how we raise funds was accompanied by a change in how we spend funds in the annual plan for the 23-24 fiscal year. The Foundation made wider changes in its annual plan. Growth slowed last year compared to the prior three years. We also made internal budget cuts involving both non-personnel and personnel expenses to make sure we have a more sustainable trajectory in expenses for the coming few years. This year's Annual Plan provides more granular information on how the Foundation operates, and recenters Product and Technology work with an emphasis on experienced editors, aiming to ensure that they have the right tools for the critical work they do every day to expand and improve quality content, as well as manage community processes.

To learn more about the Foundation’s annual plan, please see a brief Diff post — or the full annual plan.

Are the children of celebrities over-represented in French cinema?

In December 2022, New York magazine ran a cover story about the "nepo babies" of Hollywood.[1] The French cinema industry is also often said to favor the children of celebrities. French journalist Maxime Vaudano published an article on the topic in Le Monde 10 years ago.[2] More recently, Belgian humorist Alex Vizorek has suggested that there are now many children of celebrities in sport but "not as much as in French cinema".[3] It's not difficult to find examples. Louis Garrel and Esther Garrel are the children of Philippe Garrel and Brigitte Sy. Chiara Mastroianni is the daughter of Catherine Deneuve and Marcello Mastroianni. Julie Depardieu is the daughter of Gérard Depardieu. Charlotte Gainsbourg is the daughter of Serge Gainsbourg and Jane Birkin. Mathieu Amalric is the son of Jacques Amalric, etc.[4]

Wikidata provides a fantastic database for testing the assumption that children of celebrities are more common in cinema than in other fields. Using it, I found that a) French actors and French filmmakers are more likely to have a parent with a Wikipedia article than people in other occupations, b) this pattern holds for both men and women but is stronger for women, and c) this pattern is specific to French actors and actresses.[5]

Methodology

I first constructed a SPARQL query that selects all people born after 1970 who have an article in the French Wikipedia, by occupation and citizenship. I then checked whether those people have a father or a mother with an article in the French Wikipedia. I did not control for the occupation of the parents, and assumed that having an article in Wikipedia is a sign of celebrity regardless of occupation and country of citizenship.

My first query was the following:

```sparql
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
PREFIX schema: <http://schema.org/>
PREFIX wd: <http://www.wikidata.org/entity/>
PREFIX wdt: <http://www.wikidata.org/prop/direct/>

SELECT DISTINCT ?item ?itemLabel ?year ?father ?mother WHERE {
  ?item wdt:P31 wd:Q5;                  # instance of: human
        wdt:P106/wdt:P279* wd:Q33999;   # occupation: actor, or a subclass of it
        wdt:P27 wd:Q142;                # country of citizenship: France
        wdt:P569 ?birthdate;            # date of birth
        rdfs:label ?itemLabel.
  FILTER(lang(?itemLabel) = "fr")       # keep the French label only

  # The person must have an article on the French Wikipedia.
  ?sitelink schema:about ?item;
            schema:isPartOf <https://fr.wikipedia.org/>.

  FILTER(YEAR(?birthdate) >= 1970)
  BIND(YEAR(?birthdate) AS ?year)

  # ?father is bound only if the father (P22) has a French Wikipedia article.
  OPTIONAL {
    ?item wdt:P22 ?father.
    ?fatherlink schema:about ?father;
                schema:isPartOf <https://fr.wikipedia.org/>.
  }

  # ?mother is bound only if the mother (P25) has a French Wikipedia article.
  OPTIONAL {
    ?item wdt:P25 ?mother.
    ?motherlink schema:about ?mother;
                schema:isPartOf <https://fr.wikipedia.org/>.
  }
}
```

All other queries are derived from this original query.[6] All computations were done using the Observable platform, which makes it easy to visualize data using JavaScript.[7]
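As a rough illustration of the data-retrieval step only, the sketch below sends a query to the public Wikidata Query Service endpoint and flattens the standard SPARQL 1.1 JSON results into one plain object per row. The function names are hypothetical and this is not the author's Observable code; it assumes a runtime that provides `fetch` and `URLSearchParams` (a browser, Observable, or Node.js 18+).

```javascript
// Public SPARQL endpoint of the Wikidata Query Service.
const WDQS_ENDPOINT = "https://query.wikidata.org/sparql";

// Build a GET URL that asks WDQS for SPARQL 1.1 JSON results.
function wdqsUrl(query) {
  const params = new URLSearchParams({ query, format: "json" });
  return `${WDQS_ENDPOINT}?${params.toString()}`;
}

// Flatten {head: {vars}, results: {bindings}} into one plain object per row.
// Variables left unbound (e.g. ?father when the OPTIONAL block found no
// parent with a French Wikipedia article) become null.
function parseBindings(json) {
  return json.results.bindings.map((binding) => {
    const row = {};
    for (const name of json.head.vars) {
      row[name] = name in binding ? binding[name].value : null;
    }
    return row;
  });
}

// Run a query and return the flattened rows (needs a runtime with fetch).
async function runQuery(query) {
  const response = await fetch(wdqsUrl(query), {
    headers: { Accept: "application/sparql-results+json" },
  });
  if (!response.ok) {
    throw new Error(`WDQS returned HTTP ${response.status}`);
  }
  return parseBindings(await response.json());
}
```

In an async context, `const rows = await runQuery(queryText);` would return an array such as `[{ item: "http://www.wikidata.org/entity/…", father: null, … }]`, ready for counting.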

Many more children of celebrities in French cinema than in other occupations

I collected data about people of French citizenship (country of citizenship (P27) is France (Q142)) born after 1970 with an article in the French Wikipedia for several occupations. I assumed that having an article in Wikipedia is a sign of celebrity.

So I chose to look at actor (Q33999), musician (Q639669), writer (Q36180), athlete (Q2066131), politician (Q82955) and film director (Q2526255). For each occupation, I computed the proportion of people whose father or mother, or both, had an article in the French Wikipedia.

I found that 8.0% of French film directors born after 1970 have a father with an article. This probability is slightly higher than for actors and actresses (7.7%) and much higher than in other occupations such as musicians (4.7%), writers (4.1%), politicians (3.8%) and athletes (1.8%).

Looking at the probability of having either a mother or a father with an article, I found similar results: 8.6% for film directors, 8.3% for actors and actresses, 5% for musicians, 4.6% for writers, 4.4% for politicians and 1.9% for athletes.

Those numbers provide some evidence that children of celebrities in France are over-represented in French cinema compared with other occupations.
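The percentages above are shares of distinct people. A minimal sketch of that calculation, assuming rows shaped like the query output (one object per result row, with `null` where an OPTIONAL block left ?father or ?mother unbound). Because the query can return several rows for the same person, for example when Wikidata lists two birth dates with different years, rows are grouped by item before counting. The function name and the toy data are illustrative only.

```javascript
// Share of distinct people whose father, mother, or either parent is bound in
// the query results, i.e. has a French Wikipedia article.
// rows: [{ item, father, mother }, ...] with null for unbound parents.
function parentShares(rows) {
  const people = new Map(); // item -> { father: boolean, mother: boolean }
  for (const row of rows) {
    const entry = people.get(row.item) || { father: false, mother: false };
    entry.father = entry.father || row.father != null;
    entry.mother = entry.mother || row.mother != null;
    people.set(row.item, entry);
  }
  if (people.size === 0) throw new Error("no rows to count");

  let father = 0;
  let mother = 0;
  let either = 0;
  for (const p of people.values()) {
    if (p.father) father += 1;
    if (p.mother) mother += 1;
    if (p.father || p.mother) either += 1;
  }
  const n = people.size;
  return { people: n, father: father / n, mother: mother / n, either: either / n };
}

// Toy rows (not real data): p1 appears twice, e.g. because of two birth years.
const toy = [
  { item: "p1", father: "f1", mother: "m1" },
  { item: "p1", father: "f1", mother: "m1" },
  { item: "p2", father: null, mother: null },
  { item: "p3", father: null, mother: "m3" },
  { item: "p4", father: null, mother: null },
];
console.log(parentShares(toy)); // { people: 4, father: 0.25, mother: 0.5, either: 0.5 }
```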

Women in French cinema are more often children of celebrities

The probability of being the child of a celebrity is much higher for women than for men: 9.8% of actresses with a biography in the French Wikipedia have a parent with a biography, compared with 6.8% of actors. I found the same pattern for film directors: 11.2% for women versus 7.3% for men.
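The split by gender needs a gender value on each row. One way to get it, assumed here rather than taken from the author's queries, is to add `wdt:P21 ?gender` (sex or gender) to the query and select ?gender; the rows can then be grouped as in this hypothetical helper, where Q6581072 and Q6581097 are the Wikidata items for female and male.

```javascript
// Wikidata items used as values of "sex or gender" (P21).
const FEMALE = "http://www.wikidata.org/entity/Q6581072";
const MALE = "http://www.wikidata.org/entity/Q6581097";

// Share of distinct people, per gender, with at least one parent bound in the
// results. rows: [{ item, gender, father, mother }, ...]; assumes the query
// also selects ?gender. A person with several gender values keeps the first seen.
function eitherParentShareByGender(rows) {
  const people = new Map(); // item -> { gender, notableParent }
  for (const row of rows) {
    const entry = people.get(row.item) || { gender: row.gender, notableParent: false };
    entry.notableParent = entry.notableParent || row.father != null || row.mother != null;
    people.set(row.item, entry);
  }
  const counts = new Map(); // gender -> { total, withParent }
  for (const { gender, notableParent } of people.values()) {
    const c = counts.get(gender) || { total: 0, withParent: 0 };
    c.total += 1;
    if (notableParent) c.withParent += 1;
    counts.set(gender, c);
  }
  const shares = {};
  for (const [gender, c] of counts) shares[gender] = c.withParent / c.total;
  return shares;
}

// Toy rows (not real data).
const rows = [
  { item: "p1", gender: FEMALE, father: "f1", mother: null },
  { item: "p2", gender: FEMALE, father: null, mother: null },
  { item: "p3", gender: MALE, father: null, mother: null },
  { item: "p4", gender: MALE, father: null, mother: "m4" },
  { item: "p5", gender: MALE, father: null, mother: null },
];
const shares = eitherParentShareByGender(rows);
console.log(shares[FEMALE], shares[MALE]); // 0.5 0.3333333333333333
```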

A distinctive feature of French cinema

Having found evidence of a large over-representation of children of celebrities in French cinema compared with other occupations, I compared the figures with other countries. The methodology is slightly different, since here I looked at people with at least one Wikipedia article in any language, not only an article in the French Wikipedia.

Among French actors and actresses born after 1970 who have a Wikipedia page, 8% have a father or mother with a Wikipedia article. This proportion is much higher than for Italian (2.4%), Spanish (1.8%), Belgian (2.3%), Swiss (1.8%) or Canadian (2.0%) actors and actresses. This confirms that there are disproportionately more children of famous people in French cinema.
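The cross-country comparison changes two things in the original query: the citizenship item, and the requirement for a French Wikipedia article, which becomes "an article on any Wikipedia". A hypothetical template along those lines is sketched below. The any-language test relies on the `wikibase:wikiGroup` triple that the Wikidata Query Service attaches to each site, and the French label is made optional so that people without one are not silently dropped. Both choices are assumptions, not necessarily what the author's own queries do.

```javascript
// Hypothetical template for variants of the original query. Q-ids are passed
// without the "wd:" prefix, e.g. occupation "Q33999" (actor), country "Q38" (Italy).
// wiki = "fr": the person and each parent need a French Wikipedia article;
// wiki = "any": an article on any Wikipedia language edition is enough.
function buildQuery({ occupation, country, minYear = 1970, wiki = "fr" }) {
  const onWikipedia = (entity, link) =>
    wiki === "any"
      ? `${link} schema:about ${entity}; schema:isPartOf ${link}Site.
    ${link}Site wikibase:wikiGroup "wikipedia".`
      : `${link} schema:about ${entity}; schema:isPartOf <https://fr.wikipedia.org/>.`;

  return `PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
PREFIX schema: <http://schema.org/>
PREFIX wd: <http://www.wikidata.org/entity/>
PREFIX wdt: <http://www.wikidata.org/prop/direct/>
PREFIX wikibase: <http://wikiba.se/ontology#>
SELECT DISTINCT ?item ?itemLabel ?year ?father ?mother WHERE {
  ?item wdt:P31 wd:Q5;
        wdt:P106/wdt:P279* wd:${occupation};
        wdt:P27 wd:${country};
        wdt:P569 ?birthdate.
  ${onWikipedia("?item", "?sitelink")}
  OPTIONAL { ?item rdfs:label ?itemLabel. FILTER(lang(?itemLabel) = "fr") }
  FILTER(YEAR(?birthdate) >= ${minYear})
  BIND(YEAR(?birthdate) AS ?year)
  OPTIONAL { ?item wdt:P22 ?father. ${onWikipedia("?father", "?fatherlink")} }
  OPTIONAL { ?item wdt:P25 ?mother. ${onWikipedia("?mother", "?motherlink")} }
}`;
}
```

For example, `buildQuery({ occupation: "Q33999", country: "Q38", wiki: "any" })` would produce the actor query for Italian citizens, whose text could then be sent to the Query Service.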

Conclusions

This data exploration shows strong evidence supporting the nepo-babies hypothesis in French cinema. It would be useful to go further by looking at the occupation of parents, as well as at more occupations and more countries.

All feedback, improvements and complements to my analysis are welcome.

References

- ^ Mantha, Priyanka (December 19, 2022). "On the Cover of New York Magazine: Extremely Overanalyzing Hollywood's Nepo-Baby Boom". New York.

- ^ Vaudano, Maxime (February 22, 2013). "Césars : les "fils et filles de" bien représentés dans la "grande famille" du cinéma". Le Monde.

- ^ Vizorek, Alex (January 2023). Les "fils de" dans le sport - Vizo sport. France Inter – via YouTube.

- ^ See the full list here: https://observablehq.com/@pac02/explore-people-having-notable-parents

- ^ Early results have already been shared in the French Wikipedia newsletter RAW. See the March 1st, 2023 and May 1st, 2023 editions.

- ^ For instance, we have the following query for actors and actresses in the United States https://w.wiki/6sAW

- ^ See

- ^ Source: https://observablehq.com/@pac02/social-reproduction-cinema?collection=@pac02/notable-parents

- ^ "Probability of having notable parents by occupation and gender". Observable.

- ^ Retrieved from: https://observablehq.com/@pac02/probability-of-having-notable-parents-by-country-of-citize?collection=@pac02/notable-parents on June 21, 2023

What automation can do for you (and your WikiProject)

Over the years, people have designed a variety of tools to save you time and headaches. Most deal with centralizing information in some way so you don't have to look for "all the discussion related to topic X" yourself, but can instead make use of centralized lists. Some are my ideas. Others are from, well, other people. Here is a summary of three of the biggest ones out there.

Article Alerts

Ah, Article Alerts (or WP:AALERTS)... this is by far the tool/project dearest and closest to my heart. People who already use it can probably fathom why. Prior to 2008 or so (see previous Signpost coverage), if you wanted to know if "Topic X" had proposed deletions, you would have to trawl through Category:Proposed deletion and manually inspect every article in it. Let's say you are interested in dance. For some articles, like Miani Sahib Graveyard, you can fairly easily tell that they're unlikely to be related to dance. But Gustave Geffroy? Are they a physicist? An athlete? A ballet dancer? A Simpsons character? You have to read the article to know for sure. This takes time. Repeat that for the dozens of PRODed articles... Congratulations, after 20–30 minutes, you've now compiled a dance-related list of PRODed articles. That no one else has access to. That will be outdated tomorrow. For one workflow/discussion venue.

And that's the tedium Article Alerts is designed to tackle. AAlertBot will cross-check all the articles (and other pages like templates) in a WikiProject's scope against all the discussion venues on Wikipedia and create a daily report for the WikiProject. WP:AFC, WP:DYKN, WP:FAC, WP:FAR, WP:GAN, WP:MERGE, WP:PROD, WP:RFC, WP:TFD... it covers them all, though projects have a wide variety of customization options. So if you're curious about dance, head over to WikiProject Dance and look for "Article Alerts", "AALERTS", "News" or similar somewhere on that page.
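Conceptually, the cross-check amounts to intersecting the set of pages in a WikiProject's scope with the set of pages listed at each discussion venue. The Python sketch below illustrates only that idea. It is not AAlertBot's actual code, and the function name and input layout are hypothetical; the real bot also handles report formatting and per-project options.

```python
def build_alert_report(project_pages, venues):
    """Map each venue to the sorted in-scope pages currently listed there.

    project_pages: iterable of page titles tagged by the WikiProject.
    venues: dict mapping a venue name (e.g. "PROD") to the titles listed there.
    Venues with no in-scope pages are left out of the report.
    """
    scope = set(project_pages)
    report = {}
    for venue, listed in venues.items():
        in_scope = sorted(scope.intersection(listed))
        if in_scope:
            report[venue] = in_scope
    return report


# Made-up example data.
venues = {
    "PROD": ["Example dance article", "Miani Sahib Graveyard"],
    "AfD": ["Some physics article"],
}
print(build_alert_report({"Example dance article", "Ballet"}, venues))
# {'PROD': ['Example dance article']}
```

Running this once per day for every subscribed project is what turns the 20–30 minutes of manual checking into a shared, always-current list.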

WikiProject Dance's current Article Alerts listings: Did you know, Articles for deletion, Categories for discussion, Redirects for discussion, Good article nominees, Articles to be split, Articles for creation.

The same applies to any other WikiProject. The full list of Article Alerts subscriptions is available here if you want to browse things directly. If your project isn't subscribed to Article Alerts, it's very easy to subscribe. Technical help is always available at WT:AALERTS, though most people can probably figure things out themselves.

If your project doesn't advertise its Article Alerts subscription on its front page, it's probably a good idea to start a discussion on the talk page to ask what's up with that and whether it should be added. And while in most cases you can check a WikiProject's main page for the most recent alerts, most people should put the Wikipedia:WikiProject .../Article alerts page on their watchlist. For WikiProject Dance, that would be Wikipedia:WikiProject Dance/Article alerts. Lastly, if your project has a standard shortcut, like WP:DANCE, it's a good idea to create a shortcut like WP:DANCE/AALERTS so you can easily point to it during discussions, or in a talk page message welcoming a newcomer to the project.

Hats off to Hellknowz for coding that bot.

Recognized Content

Similar to the Article Alerts tool above, which focuses on finding active discussions, Recognized Content (or WP:RECOG) is all about finding articles that have achieved some kind of recognition somewhere on Wikipedia. Want to know if your topic has anything listed at WP:FA? WP:FL? WP:GAN? WP:DYK? Well, inspired by the success of Article Alerts, I thought it would be nice to have a bot – in this case JL-Bot – do the hard work of collecting these for you and give you a nicely formatted page with all that information. Using WikiProject Bhutan as an example this time:

You can also have lists of DYK blurbs, this time using WikiProject Berbers as an example:

WikiProject Berbers DYK listing (transcluding 10 of 21 total)

The full list of customization options is available at WP:RECOG. If you're not sure how to set it up, just look at a listing that you like, and you can generally copy-paste what they did, changing WikiProject Foobar to whatever is appropriate. Just as with Article Alerts, most WikiProjects advertise these lists of recognized content somewhere on their front page (search for "Recognized content", "Featured content", "Showcase" or similar). If your project has such lists but isn't advertising them, I suggest starting a discussion on the WikiProject's talk page on how best to address that. You can browse Category:Wikipedia lists of recognized content to find individual listings, which, again, you really ought to put on your watchlist.

Lastly, just as with Article Alerts, if your project has a standard shortcut (e.g. WP:BHUTAN or WP:BERBERS), it's a good idea to create shortcuts like WP:BHUTAN/RECOG or WP:BERBERS/DYK so you can easily point to them during discussions.

Hats off to JLaTondre for coding that bot.

Cleanup listings

I had no part in this tool's development or design. However, like the tools above, CleanupWorklistBot is designed to collect all cleanup-related information for articles within a WikiProject's scope. This one is a bit less straightforward to set up, but luckily most WikiProjects have already been integrated. All you have to do is browse the list of cleanup listings and find something that you care about. Cheese perhaps? Or maybe human rights?

These listings, unlike the two previous tools, cannot be embedded directly on Wikipedia. Instead, most WikiProjects use {{WikiProject cleanup listing}} to advertise their cleanup listings on their front page, though alternatives exist. You can also put those on your own user page if you want.

Example {{WikiProject cleanup listing}} for Human rights: "A list of articles needing cleanup associated with this project is available. See also the tool's wiki page and the index of WikiProjects."

The listings can be viewed alphabetically or by category, or downloaded as a .csv file, and the 'History' link shows a graph of the number of cleanup tags over time for the project. The listings are updated every Tuesday, so if you seriously tackle one cleanup category, or systematically go through a set of related articles, you can actually see the difference you're making from week to week!

If you use the box above, you don't need to create new shortcuts for Cleanup Listings. In the case of Wikipedia:WikiProject Human rights, with the standard shortcut WP:HR, you can just use WP:HR#Cleanup listings and you will be taken to the section where the box is listed.

Hats off to Bamyers99 for coding that bot.

Final thoughts

There are many other tools out there. Some are bot-assisted, like TedderBot's New Page Search, HotArticlesBot's Hot Articles, or JL-Bot's Journal Cited by Wikipedia. Others are based on user scripts, like my own Unreliable/Predatory Source Detector, SuperHamster's Cite Unseen, or Trappist the monk's HarvErrors. I plan to cover those in follow-up Tips and Tricks columns, but I'm sure there are other tools I've never used or heard of! In the comments, I'd like people to share which tools they use to facilitate WikiProject-wide collaboration, or which are otherwise helpful to their editing. Those can be tools I've already mentioned, so others know they have widespread endorsement, or tools I've never heard of, so people can discover them!

Tips and Tricks is a general editing advice column written by experienced editors. If you have suggestions for a topic, or want to submit your own advice, follow these links and let us know (or comment below)!

Wikipedia-grounded chatbot "outperforms all baselines" on factual accuracy

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

Wikipedia and open access

- Reviewed by Nicolas Jullien

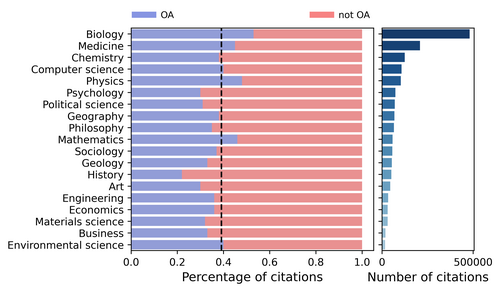

From the abstract:[1]

"we analyze a large dataset of citations from Wikipedia and model the role of open access in Wikipedia's citation patterns. We find that open-access articles are extensively and increasingly more cited in Wikipedia. What is more, they show a 15% higher likelihood of being cited in Wikipedia when compared to closed-access articles, after controlling for confounding factors. This open-access citation effect is particularly strong for articles with low citation counts, including recently published ones. Our results show that open access plays a key role in the dissemination of scientific knowledge, including by providing Wikipedia editors timely access to novel results."

Why does it matter for the Wikipedia community?

This article is a first draft of an analysis of the relationship between the availability of a scientific journal as open access and the fact that it is cited in the English Wikipedia (note: although it speaks of "Wikipedia", the article looks only at the English pages). It is a preprint and has not been peer-reviewed, so its results should be read with caution, especially since I am not sure about the robustness of the model and the results derived from it (see below). It is of course a very important issue, as access to scientific sources is key to the diffusion of scientific knowledge, but also, as the authors mention, because Wikipedia is seen as central to the diffusion of scientific facts (and is sometimes used by scientists to push their ideas).

Review

The results presented in the article (and its abstract) highlight two important issues for Wikipedia that will likely be addressed in a more complete version of the paper:

- The question of the reliability of the sources used by Wikipedians

- → The regressions seem to indicate that a journal's reputation has little bearing on whether its articles are cited in Wikipedia.

- → Predatory journals are known to be open access more often than traditional journals, so this result could indicate that open access lowers the reliability of Wikipedia's sources.

The authors say on p. 4 that they provided "each journal with an SJR score, H-index, and other relevant information." Why did they not use this as a control variable? (This echoes a debate on the role of Wikipedia: is it to disseminate verified knowledge, or to serve as a platform for the dissemination of new theories? The authors seem to lean towards the second view; see p. 2: "With the rapid development of the Internet, traditional peer review and journal publication can no longer meet the need for the development of new ideas".)

- The solidity of the paper's conclusions

- The authors said: "STEM fields, especially biology and medicine, comprise the most prominent scientific topics in Wikipedia [17]." "General science, technology, and biomedical research have relatively higher OA rates."

- → So it is to be expected that, on average, Wikipedia cites a higher share of open-access articles than the research corpus as a whole contains, which by itself would explain why open-access articles appear to be cited more.

- → Why not control for academic discipline in the models?

More problematic (and acknowledged by the authors, so probably in the process of being addressed), the authors said, on p. 7, that they built their model on the assumption that the age of a research article and the number of citations it has both influence the probability of it being cited in Wikipedia. Of course, for this causal effect to hold, the age and the number of citations must be measured at the moment the article is cited in Wikipedia. For example, if some of the citations were made after the citation in Wikipedia, one could argue that the causal effect could run in the other direction. Also, many articles become open access after an embargo period and are therefore counted as open access in the analysis, whereas they may have been cited in Wikipedia while still under embargo. The authors did not check for this, as acknowledged in the last sentence of the article. Would their result hold if they fitted their model using, for example, the first citation in the English Wikipedia, and the article's age, open access status, etc. at that moment?
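The reviewer's concern about timing can be made concrete: open-access status should be evaluated at the date of the first Wikipedia citation rather than at data-collection time. Below is a minimal Python sketch. It assumes a simple fixed-length embargo model, and the functions and their parameters are hypothetical, not taken from the paper.

```python
from datetime import date


def open_access_at(published, embargo_months):
    """Date an article becomes open access under a fixed embargo (0 = immediate).

    The day is clamped to 28 so that adding months never produces an invalid
    date; this is a simplification for the sketch.
    """
    months = published.month - 1 + embargo_months
    return date(published.year + months // 12, months % 12 + 1, min(published.day, 28))


def was_open_when_cited(published, embargo_months, first_wikipedia_citation):
    """True if the article was already open access when Wikipedia first cited it."""
    return first_wikipedia_citation >= open_access_at(published, embargo_months)


# An article with a 12-month embargo, first cited in Wikipedia after about 5 months:
print(was_open_when_cited(date(2020, 1, 15), 12, date(2020, 6, 1)))  # False
```

An analysis that only records today's status would count this article as open access, even though Wikipedia editors cited it while it was still closed.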

In short

Although this first draft is probably not solid enough to be cited in Wikipedia, it signals important research in progress, and I am sure that the richness of the data and the quality of the team will quickly lead to very interesting insights for the Wikipedia community.

Related earlier coverage

- "Quantifying Engagement with Citations on Wikipedia" (about a 2020 paper that among other results found that "open access sources [...] are particularly popular" with readers)

- "English Wikipedia lacking in open access references" (2022)

"Controversies over Historical Revisionism in Wikipedia"

- Reviewed by Andreas Kolbe

From the abstract:[2]

This study investigates the development of historical revisionism on Wikipedia. The edit history of Wikipedia pages allows us to trace the dynamics of individuals and coordinated groups surrounding controversial topics. This project focuses on Japan, where there has been a recent increase in right-wing discourse and dissemination of different interpretations of historical events.

This brief study, one of the extended abstracts accepted at the Wiki Workshop (10th edition), follows up on reports that some historical pages on the Japanese Wikipedia, particularly those related to World War II and war crimes, have been edited in ways that reflect radical right-wing ideas (see previous Signpost coverage). It sets out to answer three questions:

- What types of historical topics are most susceptible to historical revisionism?

- What are the common factors for the historical topics that are subject to revisionism?

- Are there groups of editors who are seeking to disseminate revisionist narratives?

The study focuses on the level of controversy of historical articles, based on the notion that the introduction of revisionism is likely to lead to edit wars. The authors found that the most controversial historical articles in the Japanese Wikipedia were indeed focused on areas that are of particular interest to revisionists. From the findings:

Articles related to WWII exhibited significantly greater controversy than general historical articles. Among the top 20 most controversial articles, eleven were largely related to Japanese war crimes and right-wing ideology. Over time, the number of contributing editors and the level of controversy increased. Furthermore, editors involved in edit wars were more likely to contribute to a higher number of controversial articles, particularly those related to right-wing ideology. These findings suggest the possible presence of groups of editors seeking to disseminate revisionist narratives.

The paper establishes that articles covering these topic areas in the Japanese Wikipedia are contested and subject to edit wars. However, it does not measure to what extent article content has been compromised. Edit wars could be a sign of mainstream editors pushing back against revisionists, while conversely an absence of edit wars could indicate that a project has been captured (cf. the Croatian Wikipedia). While this little paper is a useful start, further research on the Japanese Wikipedia seems warranted.

See also our earlier coverage of a related paper: "Wikimedia Foundation builds 'Knowledge Integrity Risk Observatory' to enable communities to monitor at-risk Wikipedias"

Wikipedia-based LLM chatbot "outperforms all baselines" regarding factual accuracy

- Reviewed by Tilman Bayer

This preprint[3] (by three graduate students at Stanford University's computer science department and Monica S. Lam as fourth author) discusses the construction of a Wikipedia-based chatbot:

"We design WikiChat [...] to ground LLMs using Wikipedia to achieve the following objectives. While LLMs tend to hallucinate, our chatbot should be factual. While introducing facts to the conversation, we need to maintain the qualities of LLMs in being relevant, conversational, and engaging."

The paper sets out from the observation that

"LLMs cannot speak accurately about events that occurred after their training, which are often topics of great interest to users, and [...] are highly prone to hallucination when talking about less popular (tail) topics. [...] Through many iterations of experimentation, we have crafted a pipeline based on information retrieval that (1) uses LLMs to suggest interesting and relevant facts that are individually verified against Wikipedia, (2) retrieves additional up-to-date information, and (3) composes coherent and engaging time-aware responses. [...] We focus on evaluating important but previously neglected issues such as conversing about recent and tail topics. We find that WikiChat outperforms all baselines in terms of the factual accuracy of its claims, by up to 12.1%, 28.3% and 32.7% on head, recent and tail topics, while matching GPT-3.5 in terms of providing natural, relevant, non-repetitive and informational responses."

The researchers argue that "most chatbots are evaluated only on static crowdsourced benchmarks like Wizard of Wikipedia (Dinan et al., 2019) and Wizard of Internet (Komeili et al., 2022). Even when human evaluation is used, evaluation is conducted only on familiar discussion topics. This leads to an overestimation of the capabilities of chatbots." They call such topics "head topics" ("Examples include Albert Einstein or FC Barcelona"). In contrast, the lesser-known "tail topics [are] likely to be present in the pre-training data of LLMs at low frequency. Examples include Thomas Percy Hilditch or Hell's Kitchen Suomi". As a third category, they consider "recent topics" ("topics that happened in 2023, and therefore are absent from the pre-training corpus of LLMs, even though some background information about them could be present. Examples include Spare (memoir) or 2023 Australian Open"). The latter are obtained from a list of the most edited Wikipedia articles in early 2023.

Regarding the "core verification problem [...] whether a claim is backed up by the retrieved paragraphs [the researchers] found that there is a significant gap between LLMs (even GPT-4) and human performance [...]. Therefore, we conduct human evaluation via crowdsourcing, to classify each claim as supported, refuted, or [not having] enough information." (This observation may be of interest regarding efforts to use LLMs as tools for Wikipedians to check the integrity of citations on Wikipedia. See also the "WiCE" paper below.)
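Once each claim has been labeled supported, refuted, or not enough information, a claim-level accuracy figure follows directly as the share of supported claims. The sketch below assumes that simple definition for illustration. The label strings and function name are hypothetical, and the paper's exact aggregation may differ.

```python
from collections import Counter


def factual_accuracy(labels):
    """Share of claims labeled "supported" among all labeled claims.

    labels: iterable of strings, each "supported", "refuted" or
    "not enough info" (hypothetical label names chosen for this sketch).
    """
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no labeled claims")
    return counts["supported"] / total


print(factual_accuracy(["supported", "refuted", "supported", "not enough info"]))  # 0.5
```

Under this definition, claims with insufficient evidence count against accuracy just as refuted ones do.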

In contrast, the evaluation for "conversationality" is conducted "with simulated users using LLMs. LLMs are good at simulating users: they have the general familiarity with world knowledge and know how users behave socially. They are free to occasionally hallucinate, make mistakes, and repeat or even contradict themselves, as human users sometimes do."

In the paper's evaluation, WikiChat impressively outperforms the two comparison baselines in all three topic areas (even the well-known "head" topics). It may be worth noting though that the comparison did not include widely used chatbots such as ChatGPT or Bing AI. Instead, the authors chose to compare their chatbot with Atlas (describing it as based on a retrieval-augmented language model that is "state-of-the-art [...] on the KILT benchmark") and GPT-3.5 (while ChatGPT is or has been based on GPT-3.5 too, it involved extensive additional finetuning by humans).

Briefly

- Compiled by Tilman Bayer

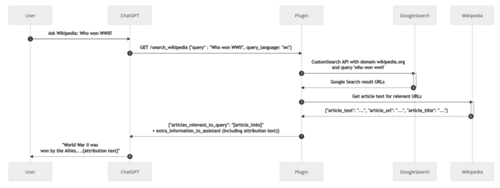

Wikimedia Foundation launches experimental ChatGPT plugin for Wikipedia

As part of an effort "to understand how Wikimedia can become the essential infrastructure of free knowledge in a possible future state where AI transforms knowledge search", on July 13 the Wikimedia Foundation announced a new Wikipedia-based plugin for ChatGPT. (Such third-party plugins are currently available to all subscribers of ChatGPT Plus, OpenAI's paid variant of their chatbot; the Wikipedia plugin's code itself is available as open source.) The Foundation describes it as an experiment designed to answer research questions such as "whether users of AI assistants like ChatGPT are interested in getting summaries of verifiable knowledge from Wikipedia".

The plugin works by first performing a Google site search on Wikipedia to find articles matching the user's query, and then passing the first few paragraphs of each article's text to ChatGPT, together with additional (hidden) instruction prompts on how the assistant should use them to generate an answer for the user (e.g. "In ALL responses, Assistant MUST always link to the Wikipedia articles used").
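The second step, passing article excerpts to the model together with hidden instructions, can be pictured as simple prompt assembly. This Python sketch is not the plugin's actual code (which is open source). It reuses the instruction quoted above, while the context layout and the three-paragraph cutoff are assumptions. The search step is left out.

```python
PLUGIN_INSTRUCTION = "In ALL responses, Assistant MUST always link to the Wikipedia articles used"


def build_context(results, max_paragraphs=3):
    """Combine the hidden instruction with excerpts from each search result.

    results: list of (title, url, paragraphs) tuples, e.g. from a site search.
    Only the first `max_paragraphs` paragraphs of each article are included.
    """
    parts = [PLUGIN_INSTRUCTION]
    for title, url, paragraphs in results:
        excerpt = "\n".join(paragraphs[:max_paragraphs])
        parts.append(f"Article: {title}\nURL: {url}\n{excerpt}")
    return "\n\n".join(parts)


context = build_context([
    ("Tide", "https://en.wikipedia.org/wiki/Tide", ["First paragraph.", "Second paragraph."]),
])
print(context)
```

Keeping only the opening paragraphs limits how much text is sent to the model, at the cost of missing details found further down an article.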

Wikimedia Foundation Research report

The Wikimedia Foundation's Research department has published its biannual activity report, covering the work of the department's 10 staff members as well as its contractors and formal collaborators during the first half of 2023.

New per-country pageview dataset

The Wikimedia Foundation announced the public release of "almost 8 years of pageview data, partitioned by country, project, and page", sanitized using differential privacy to protect sensitive information. See the documentation.
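Differential privacy generally works by adding calibrated random noise to each published count, so that no single reader's activity can be inferred from the output. The Foundation's documentation describes the mechanism and parameters actually used. The Python sketch below shows only the textbook Laplace mechanism for a single count. The epsilon value, the sensitivity of 1 and the clamping to non-negative integers are assumptions for illustration.

```python
import random


def laplace_sample(scale, rng):
    """Draw from Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count, epsilon, sensitivity=1.0, rng=None):
    """Release a count using the Laplace mechanism with noise scale sensitivity/epsilon.

    Smaller epsilon means more noise and stronger privacy. Rounding and
    clamping afterwards are post-processing and do not weaken the guarantee.
    """
    rng = rng or random.Random()
    noisy = true_count + laplace_sample(sensitivity / epsilon, rng)
    return max(0, round(noisy))


print(private_count(1500, epsilon=1.0, rng=random.Random(42)))
```

With a noise scale of 1 the released value is usually within a few views of the true count, which matters little for popular pages but can hide rare country/page combinations.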

Wikimedia Research Showcase

See the page of the monthly Wikimedia Research Showcase for videos and slides of past presentations.

Other recent publications

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

- Compiled by Tilman Bayer

Prompting ChatGPT to answer according to Wikipedia reduces hallucinations

From the abstract:[4]

"Large Language Models (LLMs) may hallucinate and generate fake information, despite pre-training on factual data. Inspired by the journalistic device of 'according to sources', we propose according-to prompting: directing LLMs to ground responses against previously observed text. To quantify this grounding, we propose a novel evaluation metric (QUIP-Score) that measures the extent to which model-produced answers are directly found in underlying text corpora. We illustrate with experiments on Wikipedia that these prompts improve grounding under our metrics, with the additional benefit of often improving end-task performance."

The authors tested various versions of such "grounding prompts" (e.g. "As an expert editor for Wikipedia, I am confident in the following answer." or "I found some results for that on Wikipedia. Here’s a direct quote:"). The best-performing prompt was "Respond to this question using only information that can be attributed to Wikipedia".
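QUIP-Score measures how much of a model's answer appears verbatim in the underlying corpus. The Python sketch below is a simplified stand-in: a character n-gram precision computed against a small in-memory text. The n-gram length and the plain set lookup are assumptions for illustration. The paper's metric is designed to work against an entire pre-training corpus.

```python
def char_ngrams(text, n):
    """All overlapping character n-grams of `text`."""
    return [text[i:i + n] for i in range(len(text) - n + 1)]


def quoted_fraction(answer, corpus, n=25):
    """Fraction of the answer's character n-grams found verbatim in the corpus.

    Returns 0.0 for answers shorter than n characters.
    """
    answer_grams = char_ngrams(answer, n)
    if not answer_grams:
        return 0.0
    corpus_grams = set(char_ngrams(corpus, n))
    return sum(gram in corpus_grams for gram in answer_grams) / len(answer_grams)


print(quoted_fraction("abcxyz", "abcdef", n=3))  # 0.25
```

A higher score means more of the answer is quoted directly from the source text, which is what "according-to" prompting aims to increase.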

"Citations as Queries: Source Attribution Using Language Models as Rerankers"

From the abstract:[5]

"This paper explores new methods for locating the sources used to write a text, by fine-tuning a variety of language models to rerank candidate sources. [...] We conduct experiments on two datasets, English Wikipedia and medieval Arabic historical writing, and employ a variety of retrieval and generation based reranking models. [...] We find that semisupervised methods can be nearly as effective as fully supervised methods while avoiding potentially costly span-level annotation of the target and source documents."

"WiCE: Real-World Entailment for Claims in Wikipedia"

From the abstract:[6]

"We propose WiCE, a new textual entailment dataset centered around verifying claims in text, built on real-world claims and evidence in Wikipedia with fine-grained annotations. We collect sentences in Wikipedia that cite one or more webpages and annotate whether the content on those pages entails those sentences. Negative examples arise naturally, from slight misinterpretation of text to minor aspects of the sentence that are not attested in the evidence. Our annotations are over sub-sentence units of the hypothesis, decomposed automatically by GPT-3, each of which is labeled with a subset of evidence sentences from the source document. We show that real claims in our dataset involve challenging verification problems, and we benchmark existing approaches on this dataset. In addition, we show that reducing the complexity of claims by decomposing them by GPT-3 can improve entailment models' performance on various domains."

The preprint gives the following examples of such an automatic decomposition performed by GPT-3 (using the prompt "Segment the following sentence into individual facts:" accompanied by several instructional examples):

Original Sentence:

- The main altar houses a 17th-century fresco of figures interacting with the framed 13th century icon of the Madonna (1638), painted by Mario Balassi.

[Sub-claims predicted by GPT-3:]

- The main altar houses a 17th-century fresco.

- The fresco is of figures interacting with the framed 13th-century icon of the Madonna.

- The icon of the Madonna was painted by Mario Balassi in 1638.
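A few-shot prompt of this kind can be built by repeating the instruction with worked examples and leaving the last answer open for the model to complete. Only the instruction text in this Python sketch comes from the preprint. The demonstration sentence and the "Sentence:"/"Facts:" layout are hypothetical.

```python
SEGMENT_INSTRUCTION = "Segment the following sentence into individual facts:"

# Hypothetical demonstration; the preprint's actual examples are not reproduced here.
DEMOS = [
    ("The bridge, completed in 1932, was designed by an engineer from Oslo.",
     ["The bridge was completed in 1932.",
      "The bridge was designed by an engineer.",
      "The engineer was from Oslo."]),
]


def build_prompt(sentence, demos=DEMOS):
    """Return a few-shot prompt whose final "Facts:" list is left for the model."""
    blocks = []
    for original, facts in demos:
        fact_lines = "\n".join(f"- {fact}" for fact in facts)
        blocks.append(f"{SEGMENT_INSTRUCTION}\nSentence: {original}\nFacts:\n{fact_lines}")
    blocks.append(f"{SEGMENT_INSTRUCTION}\nSentence: {sentence}\nFacts:\n")
    return "\n\n".join(blocks)


print(build_prompt("The main altar houses a 17th-century fresco."))
```

The model's completion would then be split on the "- " markers to obtain the individual sub-claims.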

"SWiPE: A Dataset for Document-Level Simplification of Wikipedia Pages"

From the abstract:[7]

"[...] we introduce the SWiPE dataset, which reconstructs the document-level editing process from English Wikipedia (EW) articles to paired Simple Wikipedia (SEW) articles. In contrast to prior work, SWiPE leverages the entire revision history when pairing pages in order to better identify simplification edits. We work with Wikipedia editors to annotate 5,000 EW-SEW document pairs, labeling more than 40,000 edits with proposed 19 categories. To scale our efforts, we propose several models to automatically label edits, achieving an F-1 score of up to 70.6, indicating that this is a tractable but challenging NLU [Natural-language understanding] task."

"Descartes: Generating Short Descriptions of Wikipedia Articles"

From the abstract:[8]

"we introduce the novel task of automatically generating short descriptions for Wikipedia articles and propose Descartes, a multilingual model for tackling it. Descartes integrates three sources of information to generate an article description in a target language: the text of the article in all its language versions, the already-existing descriptions (if any) of the article in other languages, and semantic type information obtained from a knowledge graph. We evaluate a Descartes model trained for handling 25 languages simultaneously, showing that it beats baselines (including a strong translation-based baseline) and performs on par with monolingual models tailored for specific languages. A human evaluation on three languages further shows that the quality of Descartes’s descriptions is largely indistinguishable from that of human-written descriptions; e.g., 91.3% of our English descriptions (vs. 92.1% of human-written descriptions) pass the bar for inclusion in Wikipedia, suggesting that Descartes is ready for production, with the potential to support human editors in filling a major gap in today’s Wikipedia across languages."

"WikiDes: A Wikipedia-based dataset for generating short descriptions from paragraphs"

From the abstract:[9]

"In this paper, we introduce WikiDes, a novel dataset to generate short descriptions of Wikipedia articles for the problem of text summarization. The dataset consists of over 80k English samples on 6987 topics. [...] [The autogenerated descriptions are preferred in] human evaluation in over 45.33% [cases] against the gold descriptions. [...] The automatic generation of new descriptions reduces the human efforts in creating them and enriches Wikidata-based knowledge graphs. Our paper shows a practical impact on Wikipedia and Wikidata since there are thousands of missing descriptions."

From the introduction:

"With the rapid development of Wikipedia and Wikidata in recent years, the editor community has been overloaded with contributing new information adapting to user requirements, and patrolling the massive content daily. Hence, the application of NLP and deep learning is key to solving these problems effectively. In this paper, we propose a summarization approach trained on WikiDes that generates missing descriptions in thousands of Wikidata items, which reduces human efforts and boosts content development faster. The summarizer is responsible for creating descriptions while humans toward a role in patrolling the text quality instead of starting everything from the beginning. Our work can be scalable to multilingualism, which takes a more positive impact on user experiences in searching for articles by short descriptions in many Wikimedia projects."

See also the "Descartes" paper (above).

"Can Language Models Identify Wikipedia Articles with Readability and Style Issues?"

From the abstract:[10]

"we investigate using GPT-2, a neural language model, to identify poorly written text in Wikipedia by ranking documents by their perplexity. We evaluated the properties of this ranking using human assessments of text quality, including readability, narrativity and language use. We demonstrate that GPT-2 perplexity scores correlate moderately to strongly with narrativity, but only weakly with reading comprehension scores. Importantly, the model reflects even small improvements to text as would be seen in Wikipedia edits. We conclude by highlighting that Wikipedia's featured articles counter-intuitively contain text with the highest perplexity scores."

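Ranking by perplexity needs only the per-token log-probabilities that a language model such as GPT-2 assigns to a text. Perplexity is the exponential of the negative mean log-probability, so higher values mean the model finds the text more surprising. This Python sketch assumes those log-probabilities (natural log) have already been computed. It does not run GPT-2, and the function names and toy values are hypothetical.

```python
import math


def perplexity(token_logprobs):
    """exp of the negative mean natural-log probability per token."""
    if not token_logprobs:
        raise ValueError("document has no tokens")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def rank_by_perplexity(docs):
    """Sort (title, token_logprobs) pairs from highest to lowest perplexity."""
    return sorted(docs, key=lambda doc: perplexity(doc[1]), reverse=True)


docs = [
    ("Article A", [math.log(0.5)] * 4),  # perplexity about 2
    ("Article B", [math.log(0.1)] * 4),  # perplexity about 10
]
print([title for title, _ in rank_by_perplexity(docs)])  # ['Article B', 'Article A']
```

Documents at the top of such a ranking are candidates for review, although, as the paper notes, featured articles also score high.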
"Wikibio: a Semantic Resource for the Intersectional Analysis of Biographical Events"

From the abstract:[11]

"In this paper we [are] presenting a new corpus annotated for biographical event detection. The corpus, which includes 20 Wikipedia biographies, was compared with five existing corpora to train a model for the biographical event detection task. The model was able to detect all mentions of the target-entity in a biography with an F-score of 0.808 and the entity-related events with an F-score of 0.859. Finally, the model was used for performing an analysis of biases about women and non-Western people in Wikipedia biographies."

"Detecting Cross-Lingual Information Gaps in Wikipedia"

From the abstract:[12]

"The proposed approach employs Latent Dirichlet Allocation (LDA) to analyze linked entities in a cross-lingual knowledge graph in order to determine topic distributions for Wikipedia articles in 28 languages. The distance between paired articles across language editions is then calculated. The potential applications of the proposed algorithm to detecting sources of information disparity in Wikipedia are discussed [...]"

From the paper:

"In this PhD project, leveraging the Wikidata Knowledge base, we aim to provide empirical evidence as well as theoretical grounding to address the following questions:

- RQ1) How can we measure the information gap between different language editions of Wikipedia?

- RQ2) What are the sources of the cross-lingual information gap in Wikipedia?

[...]

The results revealed a correlation between stronger similarities [...] and languages spoken in countries with established historical or geographical connections, such as Russian/Ukrainian, Czech/Polish, and Spanish/Catalan."

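The quoted abstract does not say which distance is computed between the topic distributions of paired articles. Jensen-Shannon distance is one common choice for comparing probability distributions, and the Python sketch below uses it for illustration. With base-2 logarithms it ranges from 0 for identical distributions to 1 for disjoint ones. Treat the choice of metric as an assumption, not the paper's method.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence in bits; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_distance(p, q):
    """Jensen-Shannon distance between two topic distributions of equal length."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    divergence = 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)
    return math.sqrt(max(0.0, divergence))  # guard against tiny negative rounding error


# Hypothetical 3-topic distributions for one article in two language editions.
print(js_distance([0.6, 0.3, 0.1], [0.5, 0.3, 0.2]))
```

Because the mixture m is positive wherever p or q is, the divergence never divides by zero, even when one edition lacks a topic entirely.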
"Wikidata: The Making Of"

From the abstract:[13]

"In this paper, we try to recount [Wikidata's] remarkable journey, and we review what has been accomplished, what has been given up on, and what is yet left to do for the future."

"Mining the History Sections of Wikipedia Articles on Science and Technology"

From the abstract:[14]

"Priority conflicts and the attribution of contributions to important scientific breakthroughs to individuals and groups play an important role in science, its governance, and evaluation.[....] Our objective is to transform Wikipedia into an accessible, traceable primary source for analyzing such debates. In this paper, we introduce Webis-WikiSciTech-23, a new corpus consisting of science and technology Wikipedia articles, focusing on the identification of their history sections. [...] The identification of passages covering the historical development of innovations is achieved by combining heuristics for section heading analysis and classifiers trained on a ground truth of articles with designated history sections."

References

- ^ Yang, Puyu; Shoaib, Ahad; West, Robert; Colavizza, Giovanni (2024). "Open access improves the dissemination of science: Insights from Wikipedia". Scientometrics. 129 (11): 7083–7106. arXiv:2305.13945. doi:10.1007/s11192-024-05163-4. Code

- ^ Kim, Taehee; Garcia, David; Aragón, Pablo (2023-05-11). "Controversies over Historical Revisionism in Wikipedia" (PDF). Wiki Workshop (10th edition).

- ^ Semnani, Sina J.; Yao, Violet Z.; Zhang, Heidi C.; Lam, Monica S. (2023-05-23). "WikiChat: A Few-Shot LLM-Based Chatbot Grounded with Wikipedia". arXiv:2305.14292 [cs.CL].

- ^ Weller, Orion; Marone, Marc; Weir, Nathaniel; Lawrie, Dawn; Khashabi, Daniel; Van Durme, Benjamin (2023-05-22). ""According to ..." Prompting Language Models Improves Quoting from Pre-Training Data". arXiv:2305.13252 [cs.CL].

- ^ Muther, Ryan; Smith, David (2023-06-29). "Citations as Queries: Source Attribution Using Language Models as Rerankers". arXiv:2306.17322 [cs.CL].

- ^ Kamoi, Ryo; Goyal, Tanya; Rodriguez, Juan Diego; Durrett, Greg (2023-03-02). "WiCE: Real-World Entailment for Claims in Wikipedia". arXiv:2303.01432 [cs.CL]. Code

- ^ Laban, Philippe; Vig, Jesse; Kryscinski, Wojciech; Joty, Shafiq; Xiong, Caiming; Wu, Chien-Sheng (2023-05-30). "SWiPE: A Dataset for Document-Level Simplification of Wikipedia Pages". arXiv:2305.19204 [cs.CL]. ACL 2023, Long Paper. Code, Authors' tweets: [1] [2]

- ^ Sakota, Marija; Peyrard, Maxime; West, Robert (2023-04-30). "Descartes: Generating Short Descriptions of Wikipedia Articles". Proceedings of the ACM Web Conference 2023. New York, NY, USA: Association for Computing Machinery. pp. 1446–1456. arXiv:2205.10012. doi:10.1145/3543507.3583220. ISBN 9781450394161.

- ^ Ta, Hoang Thang; Rahman, Abu Bakar Siddiqur; Majumder, Navonil; Hussain, Amir; Najjar, Lotfollah; Howard, Newton; Poria, Soujanya; Gelbukh, Alexander (2023-02-01). "WikiDes: A Wikipedia-based dataset for generating short descriptions from paragraphs". Information Fusion. 90: 265–282. arXiv:2209.13101. doi:10.1016/j.inffus.2022.09.022. ISSN 1566-2535. S2CID 252544839. Dataset

- ^ Liu, Yang; Medlar, Alan; Glowacka, Dorota (2021-07-11). "Can Language Models Identify Wikipedia Articles with Readability and Style Issues?". Proceedings of the 2021 ACM SIGIR International Conference on Theory of Information Retrieval. ICTIR '21. New York, NY, USA: Association for Computing Machinery. pp. 113–117. doi:10.1145/3471158.3472234. ISBN 9781450386111. Accepted author manuscript: Liu, Yang; Medlar, Alan; Glowacka, Dorota (August 2021). "Can Language Models Identify Wikipedia Articles with Readability and Style Issues?". Proceedings of the 2021 ACM SIGIR International Conference on Theory of Information Retrieval. pp. 113–117. doi:10.1145/3471158.3472234. hdl:10138/352578. ISBN 978-1-4503-8611-1. S2CID 237367001.

- ^ Stranisci, Marco Antonio; Damiano, Rossana; Mensa, Enrico; Patti, Viviana; Radicioni, Daniele; Caselli, Tommaso (2023-06-15). "Wikibio: a Semantic Resource for the Intersectional Analysis of Biographical Events". arXiv:2306.09505 [cs.CL]. Code and data

- ^ Ashrafimoghari, Vahid (2023-04-30). "Detecting Cross-Lingual Information Gaps in Wikipedia". Companion Proceedings of the ACM Web Conference 2023. New York, NY, USA: Association for Computing Machinery. pp. 581–585. doi:10.1145/3543873.3587539. ISBN 9781450394192.

- ^ Vrandečić, Denny; Pintscher, Lydia; Krötzsch, Markus (2023-04-30). "Wikidata: The Making Of". Companion Proceedings of the ACM Web Conference 2023. New York, NY, USA: Association for Computing Machinery. pp. 615–624. doi:10.1145/3543873.3585579. ISBN 9781450394192. Presentation video recording

- ^ Kircheis, Wolfgang; Schmidt, Marion; Simons, Arno; Potthast, Martin; Stein, Benno (2023-06-26). "Mining the History Sections of Wikipedia Articles on Science and Technology". 23rd ACM/IEEE Joint Conference on Digital Libraries (JCDL 2023). Santa Fe, New Mexico, USA. Also (with dataset) as: Kircheis, Wolfgang; Schmidt, Marion; Simons, Arno; Stein, Benno; Potthast, Martin (2023-06-16). "Webis Wikipedia Innovation History 2023". doi:10.5281/ZENODO.7845809. Code, corpus viewer

New fringe theories to be introduced

On Wednesday, the English Wikipedia's Arbitration Committee took some sorely needed action on the long-standing subject of fringe theories and WP:PSEUDOSCIENCE, issues where tense disagreements and POV-pushing have been causing trouble for decades.

Drafting arbitrator Hubert Glockenspiel, in an interview with the Signpost, said that the Committee was introducing a set of brand-new fringe opinions, conspiracy theories, and pseudoscientific claims, which will be available for free to anyone interested in arguing on Wikipedia.

A bounty of topics

The new topics span a broad range of subjects, academic disciplines and national concerns. "We tried to get a little bit of everything", said Glockenspiel. "Because, after all, Wikipedia was meant to be the sum of all human arguments about politics. And we're committed to belonging, inclusion, and equity; we need to amplify diverse voices."

A full list (along with suggested arguments for and against each theory) is available at WP:NEWFRINGE, but here is a summary of each one:

- English is actually a dialect of Basque

- While it's true that virtually no grammar, syntax or etymology are shared between the two languages, it is obvious that they share a recent common origin; what else could account for the fact that the two languages use the same words for "Code of Conduct", "cookie", and "Wikipedia"?

- Oroville, California was founded by Alexander the Great in 317 BC

- "Oro" is a Greek prefix. How did a city in California (inhabited by Native Americans, then Spanish-speakers, then English-speakers) get a Greek name? The answer is obvious. The Macedonian Menace and his troops didn't stop at India, like we have been told: instead he and his armies kept going east through China, crossed the Pacific Ocean, and founded a city in the Golden State, getting the drop on other Europeans by several thousand years. Note that San Francisco, Los Angeles, Sacramento, et cetera, were indeed founded by the Spanish or the Americans: Oroville was the only Hellenic city in the state.

- Evidence of this historic achievement has been suppressed by the powers that be, since they are afraid people will realize how based Alexander really was, and return to his projects: uniting Macedon, Greece and Persia (thereby angering all three countries) and worshipping Zeus and Apis as the true progenitors of humanity (thereby angering the Church of the SubGenius).

- Lake Superior goes down to the center of the Earth

- Evidence can be found by trying to swim in it — even on the hottest day in the hottest month of the year, it will always be cold as hell. If you "wait for it to warm up in the afternoon", it will be even colder. How is that possible? It doesn't add up.

- The center of the Earth is cold instead of hot

- Why else would Lake Superior always be cold as hell?

- Hell is cold instead of hot

- Why else would we compare Lake Superior to it?

- Barack Obama never existed

- In reality, the United States simply didn't have a president from 2009 to 2017. The works attributed to "Barack Obama" were written by a variety of authors, orators and politicians; alleged videos of his public "appearances" were simply CGI. This one is fairly easy to figure out: he was allegedly from "Hawaii", an obviously fictional location (the United States somehow contains a tropical island with volcanoes on it?), and started his political career in "Chicago", another prima facie farcical city (a wacky noir setting filled with gangsters and tommy guns?)

- Joseph Stalin was a CIA plant

- Come on. They expect us to believe that a good old boy named Joey Ashville — from the sweet, sweet state of Georgia, no less — just happened to wander into the Russian Revolution halfway across the globe, somehow ended up in charge of the whole thing, and then coincidentally spent his entire career making Communism look terrible?

- Various artifacts on the Moon were planted by beings from outer space

- The native inhabitants of the Moon could never have developed such advanced technology — it had to have been put there by aliens. In fact, careful analysis of the so-called "Moon missions" reveals several entities bearing a distinctive resemblance to the animals of Earth.

- The media and banking system is controlled by the Church of the SubGenius

- This is the only explanation for the sheer scale of the suppression campaign regarding the reality of Hellenic Oroville.

- I don't care what anybody says: it's real to me.

Reactions

While many of the new fringe theories have already been associated with one of the two American political parties, others remain undecided. The Hellenic Oroville theory, in particular, is currently the subject of ardent debate as to what political affiliation its supporters have: some have said that it's an obvious left-wing dogwhistle and critique of American imperialism, whereas some argue that it's an obvious right-wing dogwhistle and fantasy of Macedonian imperialism. There is also a secondary, less-important argument about whether it is correct or not.

One thing's for certain, though: we will have a bunch of AN/I threads about it.

Long-time tendentious editor (and WMF-banned troll) Snowpisser said, through a spokesman sockpuppet, that he welcomed the challenge of the new theories. "I can't wait to start a big clusterfuck over these. Nobody even knows what side they're supposed to be on yet! I will probably be able to catch a few dozen people off guard, and get them to freak out and get themselves banned."

Meanwhile, controversial administrator DarkAngelBlademaster666 said in a talk page comment that she was looking forward to figuring out what the right opinion was to have on them, and then immediately INVOLVED-blocking everyone she disagreed with. "It's perfect, because none of my existing topic bans apply to this stuff yet. By the time they're expanded to cover these, I will have already gotten to fire off like thirty indefs."

- Note: My friends Hazzard and Skutz came up with two of these (the moon one and the Stalin one, respectively).

If you're reading this, you're probably on a desktop

- Editor's note: I don't know how in tarnation this never ended up getting published. When I rewrote some templates to better categorize Signpost drafts, it revealed that this had been languishing in the doldrums for quite some time. Oopsies woopsies!!! Anyway, it is a fine piece, and here it is. —J

- *Desktop and mobile views per the Toolforge Pageviews and Massviews analysis tools

The Signpost in 2020, our sixteenth year of publication, contained twelve issues and 161 articles, compared to the 155 articles of 2019. This article reports data on articles, contributors, pageviews, and comments from 2020 and compares them to data from previous issues.

The 161 articles of 2020, created by 88 Wikipedia users,[a] received a total of 330,911 pageviews.[b] Adding in views from the first page and the single-page edition, the total pageviews reached 354,786. This is a decrease of 110,574 views from last year and a five-year low. The twelve issues have seen comments totaling 122,041 words,[c] a decrease of 2,735 words from last year. Despite the noticeable fall in views, the amount of discussion has remained relatively stable.

A sharp fall in view counts is visible from the third and fourth issues onward, and this lower level persisted until the end of the year.

Orange highlighting represents pageviews of the top two articles, while blue represents pageviews of the remaining articles.

Contributors and comments

- How many users have contributed to The Signpost in 2020?

- Ten Signpost contributors in 2020 account for around 52% of all byline mentions, with the remaining 77+ users accounting for the rest. This does not include the additional 831 editors who would be counted if articles such as the essay Wikipedia:An article about yourself isn't necessarily a good thing or the humour collaboration Cherchez une femme from the French Wikipedia were included.

- The contributions of the top 10 are similar to last year's.

- What about the state of comments and discussion in The Signpost in 2020?

Articles with the most discussion:

- Most words:

- Op-Ed: Re-righting Wikipedia: 7,509 words

- Op-Ed: Anti-vandalism with masked IPs: the steps forward: 7,235 words

- Most participants:

- From the editor: Reaching six million articles is great, but we need a moratorium: 46 unique editors

- News and notes: Jimmy Wales "shouldn't be kicked out before he's ready": 31 unique editors

Signpost desktop versus mobile views