Are Luddaites defending the English Wikipedia?

In response to the increased prevalence of generative artificial intelligence, some editors of the English Wikipedia have introduced measures to reduce its use within the encyclopedia. Using images generated by text-to-image models in articles is often discouraged, unless the context specifically relates to artificial intelligence. Not all Wikipedians have adopted a hardline Luddaite approach, however, and AI-generated images are used in some articles in non-AI contexts.

Paintings in medical articles

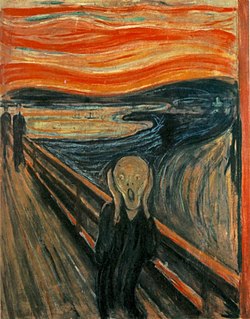

The image guidelines generally restrict the use of images that are solely decorative, as they do not contribute meaningful information or aid the reader in understanding the topic. Despite this restriction, paintings appear to be permitted in medical articles as human-made artistic interpretations of medical themes. They offer historical and cultural perspectives on medical topics.

- Venus with a Mirror, by Titian on Body image
- The Scream, by Edvard Munch on Anxiety disorder. This painting is regarded as a symbol of the anxiety of the human condition.
- The Imaginary Illness, by Honoré Daumier on Hypochondriasis
- Man with Delusions of Military Command, by Théodore Géricault on Delusional disorder
- Melancholia, by Tadeusz Pruszkowski on Anhedonia
- Dance at Molenbeek, by Pieter Brueghel the Younger on Mass psychogenic illness

WikiProject AI Cleanup

WikiProject AI Cleanup searches for AI-generated images and evaluates their suitability for an article. If any images are deemed inappropriate, they may be removed to ensure that only relevant and suitable images are kept in articles.

- Removed from Shrinkage (accounting) as a real-life alternative could be used instead.
- Removed from Kemonā for being unnecessarily explicit.
- Removed from Darul Uloom Deoband for bad anatomy and risk of being mistaken for a contemporary work (the college was founded in 1866).
- Removed from The Moon is made of green cheese because a historical illustration was already used in the article.

Perhaps the worst of the removed images, however, are the "scientific" ones, even though they only affected one article, Chemotactic drug-targeting:

- This is supposedly an amoeba moving; it looks more like a sperm cell, if anything. Amoeboid movement and a flagellum are fundamentally different movement techniques, and this looks much more like the latter. The prompt apparently was "an ameba moving toward a food source through the process of chemotaxis". Chemotaxis is movement in response to chemicals in the surrounding environment and needs a lot more creativity to illustrate.
- This image inaccurately shows a red tumour on a cell. Cancer cells don't get cancerous tumours on them; they form tumours in aggregate. This is probably based on a cross between a tumour and images of T lymphocytes attacking cancerous cells, but the combination created nonsense. The prompt was apparently a "cancer cell and its abnormal growth", but that's not meant to be growths on the cell itself.
- Supposedly leukocytes, but leukocytes aren't crystal orbs with vaguely red-blood-cell shapes around and inside them. This one is more subtly wrong (it knows there should be something inside, and there are usually some red blood cells mixed in with them in photos...), which arguably makes it more insidious. It just isn't as blatantly bad, making it easier to mistake for a real image.

It may also be worth considering what kind of AI art is being left in articles by the WikiProject:

- Advertisement for "Willy's Chocolate Experience", a disastrous event in Glasgow, Scotland that used AI for all its promotional material. Without cropping out the fake words. A pasadise of sweet teats, indeed!
- Succeeds in illustrating pastoral science fiction in a way that captures important ideas of sustainable structures.
- These somewhat deformed buildings are apparently typical of ways the meme/hoax country of Listenbourg was depicted using... well, AI images.

AI-generated images on Wikipedia articles in non-AI contexts

- Note: The following section is accurate as of the day before publication.

Policies vary between different language versions of Wikipedia. Differences in opinion among Wikipedians have resulted in the inclusion of text-to-image model-generated images on several Wikipedias, including the English Wikipedia. Many Wikipedias use Wikidata to automatically display images, which takes place beyond the scope of local projects.

- 22nd century [ro] on the Romanian Wikipedia
- Anachronism [no] on the Norwegian Wikipedia
- Archimedes [ar] on the Arabic Wikipedia
- Arcturians (New Age) on the English Wikipedia
- Battle of Dhu al-Qassah [fr] on the French Wikipedia
- Chuan Ralla [an] on the Aragonese Wikipedia
- Colonization of Mars [fr] on the French Wikipedia
- Darul Uloom Deoband [ks] on the Kashmiri Wikipedia
- Global catastrophic risk [ha] on the Hausa Wikipedia
- The Great God Pan [he] on the Hebrew Wikipedia
- Gwyn ap Nudd [fr] on the French Wikipedia
- Invisible religion [cs] on the Czech Wikipedia
- John F. Kennedy [rw] on the Kinyarwanda Wikipedia
- LHS 1140 b [ru] on the Russian Wikipedia
- Pastoral science fiction on the English Wikipedia
- Preiddeu Annwfn [ru] on the Russian Wikipedia
- Raposa do aire [gl] on the Galician Wikipedia
- Religious delusion [nl] on the Dutch Wikipedia
- Rendlesham Forest incident [es] on the Spanish Wikipedia
- Silurian hypothesis [nl] on the Dutch Wikipedia
- Space travel in science fiction, recently removed from the English Wikipedia
- Sundiata Keita [es], recently removed from the Spanish Wikipedia
- Technosignature [es] on the Spanish Wikipedia
- Twin paradox on the English Wikipedia
- A Voyage to Arcturus [he] on the Hebrew Wikipedia
- World Poetry Day [d] on many Wikipedias via Wikidata
- Xan Quinto [ca] on the Catalan Wikipedia

![22nd century [ro] on the Romanian Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/5/58/Cyborg_blue_neon_background.png/500px-Cyborg_blue_neon_background.png)

![Abchanchu [fr] on the French Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/a/a1/Abchanchu.png/500px-Abchanchu.png)

![Afanc [fr] on the French Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/8/86/Afanc.jpg/500px-Afanc.jpg)

![Anachronism [no] on the Norwegian Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/5/5d/Abraham_Lincoln_at_Air_Force_One.jpg/500px-Abraham_Lincoln_at_Air_Force_One.jpg)

![Archimedes [ar] on the Arabic Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/0/00/%D8%B3%D9%82%D8%B1%D8%A7%D8%B7_%D8%A8%D9%88%D8%A7%D8%B3%D8%B7%D8%A9_%D8%A7%D9%84%D8%B0%D9%83%D8%A7%D8%A1_%D8%A7%D9%84%D8%A7%D8%B5%D8%B7%D9%86%D8%A7%D8%B9%D9%8A.png/500px-%D8%B3%D9%82%D8%B1%D8%A7%D8%B7_%D8%A8%D9%88%D8%A7%D8%B3%D8%B7%D8%A9_%D8%A7%D9%84%D8%B0%D9%83%D8%A7%D8%A1_%D8%A7%D9%84%D8%A7%D8%B5%D8%B7%D9%86%D8%A7%D8%B9%D9%8A.png)

![Battle of Dhu al-Qassah [fr] on the French Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/1/16/History_of_the_Arab_Conquest_01.png/500px-History_of_the_Arab_Conquest_01.png)

![Chuan Ralla [an] on the Aragonese Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/2/27/Chuan_Ralla.png/500px-Chuan_Ralla.png)

![Cleopatra [cs] on the Czech Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/b/b2/AI-Generated_Interpretation_of_Cleopatra_VII.jpg/500px-AI-Generated_Interpretation_of_Cleopatra_VII.jpg)

![Climate fiction [fr][uk] on the French and Ukrainian Wikipedias](http://upload.wikimedia.org/wikipedia/commons/thumb/b/b3/Cyberpunk_city_with_not_enough_funds_to_protect_against_the_rising_sea%2C_using_the_polluted_water_for_cooler_air.jpg/500px-Cyberpunk_city_with_not_enough_funds_to_protect_against_the_rising_sea%2C_using_the_polluted_water_for_cooler_air.jpg)

![Colonization of Mars [fr] on the French Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/8/86/A_city_on_Mars_under_a_glass_dome_with_forests%2C_fields%2C_lakes%2C_houses.jpg/500px-A_city_on_Mars_under_a_glass_dome_with_forests%2C_fields%2C_lakes%2C_houses.jpg)

![Cosso [gl] on the Galician Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/6/6e/Cosso_%28deus%29_-_IA_Midjourney_versi%C3%B3n_1.png/500px-Cosso_%28deus%29_-_IA_Midjourney_versi%C3%B3n_1.png)

![Cyberpunk [hu] on the Hungarian Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/2/20/City_Scene_in_the_style_of_Blade_Runner_01.jpg/500px-City_Scene_in_the_style_of_Blade_Runner_01.jpg)

![Dagon [he] on the Hebrew Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/b/b9/StupendousMonsterOfNightmares.png/500px-StupendousMonsterOfNightmares.png)

![Danmei [fr] on the French Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/d/d1/Two_danmei_husbands.jpg/500px-Two_danmei_husbands.jpg)

![Dystopia [es] on the Spanish Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/1/12/Dystopia.png/500px-Dystopia.png)

![Global catastrophic risk [ha] on the Hausa Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/8/87/Post-apocalyptic_landscape_on_an_industrial_exoplanet_%28recently_collapsed_civilization%29.jpg/500px-Post-apocalyptic_landscape_on_an_industrial_exoplanet_%28recently_collapsed_civilization%29.jpg)

![Golem [es] on the Spanish Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/0/0e/AI_golem_waiting_for_tasks_and_providing_advice.jpg/500px-AI_golem_waiting_for_tasks_and_providing_advice.jpg)

![The Great God Pan [he] on the Hebrew Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/c/ca/YoungGirlInWhitesSittingInLaboratory.png/500px-YoungGirlInWhitesSittingInLaboratory.png)

![Gwyn ap Nudd [fr] on the French Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/2/2c/Gwynn_ap_Nudd.jpg/250px-Gwynn_ap_Nudd.jpg)

![Invisible religion [cs] on the Czech Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/0/0a/Invisible_Religion_Drawing_AI.jpg/500px-Invisible_Religion_Drawing_AI.jpg)

![Iratxoak [ca] on the Catalan Wikipedia](http://upload.wikimedia.org/wikipedia/commons/b/b4/Galtzagorri_%28euskal_mitologia%29_-_Midjourney_AI_bertsioa.png)

![John F. Kennedy [rw] on the Kinyarwanda Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/a/a1/John_F_Kennedy_in_watercolour.png/500px-John_F_Kennedy_in_watercolour.png)

![LHS 1140 b [ru] on the Russian Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/1/1b/Possible_depiction_of_LHS_1140_b_landscape_with_black_grass_and_red_starlight.jpg/500px-Possible_depiction_of_LHS_1140_b_landscape_with_black_grass_and_red_starlight.jpg)

![Mikelats [ca][fr] on the Catalan and French Wikipedias](http://upload.wikimedia.org/wikipedia/commons/thumb/6/69/Mikelats_%28euskal_mitologia%29_-_Midjourney_AI_bertsioa.png/500px-Mikelats_%28euskal_mitologia%29_-_Midjourney_AI_bertsioa.png)

![Mooring [sv] on the Swedish Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/1/1f/Ang%C3%B6ring.jpg/500px-Ang%C3%B6ring.jpg)

![Preiddeu Annwfn [ru] on the Russian Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/1/1e/Kaer_sidi.jpg/330px-Kaer_sidi.jpg)

![Raposa do aire [gl] on the Galician Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/3/38/A_raposa_de_Mor%C3%A1s.jpg/500px-A_raposa_de_Mor%C3%A1s.jpg)

![Religious delusion [nl] on the Dutch Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/f/f7/A_sign_from_God.png/500px-A_sign_from_God.png)

![Rendlesham Forest incident [es] on the Spanish Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/d/d9/Rendlesham_Forest_UFO_incident_digital_CCBY_artwork.jpg/500px-Rendlesham_Forest_UFO_incident_digital_CCBY_artwork.jpg)

![Science fantasy [eu][es] on the Basque and Spanish Wikipedias](http://upload.wikimedia.org/wikipedia/commons/thumb/2/20/An_elbish_city_on_an_exoplanet_%28science_fantasy%29.jpg/500px-An_elbish_city_on_an_exoplanet_%28science_fantasy%29.jpg)

![Security hacker [ar][es][he][ks][ko] on the Arabic, Spanish, Hebrew, Kashmiri, and Korean Wikipedias](http://upload.wikimedia.org/wikipedia/commons/thumb/b/ba/Anonymous_Hacker.png/500px-Anonymous_Hacker.png)

![Silurian hypothesis [nl] on the Dutch Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/e/ea/Silurian_hypothesis.jpg/500px-Silurian_hypothesis.jpg)

![Silurian hypothesis [fr][es] on the French and Spanish Wikipedias](http://upload.wikimedia.org/wikipedia/commons/thumb/3/3c/The_annihilated_civilization.jpg/500px-The_annihilated_civilization.jpg)

![Sundiata Keita [es], recently removed from the Spanish Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/5/56/Emperor_Sundiata.png/330px-Emperor_Sundiata.png)

![Technosignature [es] on the Spanish Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/9/9e/A_colonized_comet_with_a_long_abandoned_reception_center_above_underground_facility_%28AI_art%29.jpg/500px-A_colonized_comet_with_a_long_abandoned_reception_center_above_underground_facility_%28AI_art%29.jpg)

![Unicorn [pt] on the Portuguese Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/8/87/Unicorn2024.png/500px-Unicorn2024.png)

![A Voyage to Arcturus [he] on the Hebrew Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/d/dc/VoyageToArcturusSeance.png/500px-VoyageToArcturusSeance.png)

![World Poetry Day [d] on many Wikipedias via Wikidata](http://upload.wikimedia.org/wikipedia/commons/thumb/8/89/Elementos_representativos_de_la_poes%C3%ADa%2C.jpg/500px-Elementos_representativos_de_la_poes%C3%ADa%2C.jpg)

![Xan Quinto [ca] on the Catalan Wikipedia](http://upload.wikimedia.org/wikipedia/commons/thumb/a/ae/Xan_Quinto_-_IA_Midjourney_versi%C3%B3n_1.png/330px-Xan_Quinto_-_IA_Midjourney_versi%C3%B3n_1.png)

Discuss this story

Well, I liked the Lincoln/Anachronism image. Other than that I didn't see 1 AI image here that I liked or would have found useful in any encyclopedia. Smallbones(smalltalk) 21:16, 26 September 2024 (UTC)

No discussion of diffusion engine-created images is complete without noting that the companies that own and control such programs rest their software on a foundation of unpaid labor: the unlicensed use of artists' creative work for training the software. The historical Luddites were smeared as technophobes as a way to deflect their concerns about labor expropriation by a wealthy class who held the means of production, and at least this 'Luddaite' thinks we as a project should stay far away from these images when there are still all too many unresolved issues around labor and licensing underlying much of the software involved. Hydrangeans (she/her | talk | edits) 22:25, 26 September 2024 (UTC)

It is fascinating to see these two topics side-by-side. In general it asks us what images in our articles are for. As per other commenters, I'm happy to see AI images on Wikipedia be minimized as much as possible. I am quite fond of the use of paintings, though I do worry about a certain European bias in them. ~Maplestrip/Mable (chat) 09:09, 27 September 2024 (UTC)

Thanks for pointing out these images. I have removed some of them on the French wiki and labelled others as AI-generated in their captions on the articles. Skimel (talk) 13:43, 27 September 2024 (UTC)

No one linked to the relevant SMBC comic yet? Polygnotus (talk) 16:26, 30 September 2024 (UTC)

The "Pastoral science fiction" picture appears twice, so captioned both times. Maproom (talk) 21:40, 11 October 2024 (UTC)

- Thanks for letting me know. This wasn't a mistake, because Adam Cuerden worked on that section, while I worked on the others. I did remove it, but I later restored it as I didn't want to delete someone else's work. Svampesky (talk) 17:12, 12 October 2024 (UTC)

That Cleopatra image is exactly why I'm concerned about AI media on Commons. It's the default image used when this article is shared on social media. Out of context, does it over time become the default representation of the subject? Preferred over other art representing the subject? Used because it's the most "interesting"? The image has no basis in reality. There's no research into what dress folks wore back then, no careful representation of ethnicity or culture, it perpetuates modern beauty standards, etc. It's a refinement of noise from a sludge of data. Ckoerner (talk) 16:26, 21 October 2024 (UTC)