Building a pantheon of scientists from Wikipedia and Google Books

On 14 January 2011, Science, one of the world's most prominent scientific journals, published a "Science Hall of Fame" (SHoF),[1] which it described as "a pantheon of the most famous scientists of the past 2 centuries". Traditional assessments of "fame" and "influence" usually rely on polls and the opinions of experts in the field. Science instead opted for an objective approach based on how many times people's names appear in the digitized books available on Google Books, and on whether Wikipedia considers these people scientists.

The Signpost takes a look at what they did and reports some of the trends in the data.

Origins and overview

The SHoF was made possible by a paper by Jean-Baptiste Michel and Erez Lieberman Aiden, published on 17 December 2010.[2] Michel and Aiden aggregated data from Google Books, which at the time contained 15 million digitized books, roughly 12% of all books ever published. Filtering for quality left about a third of the original data suitable for analysis; this subset is available online at ngrams.googlelabs.com. Building on Michel and Aiden's work, John Bohannon from Science and Adrian Veres from Harvard University teamed up to create a pantheon of the most influential scientists, as measured by the number of times their names appear in Google Books. However, millions of names can be found in books, so a way was needed to decide who is a scientist and who is not. This is where Wikipedia comes in: its 900,000 biographical entries (the authors report 750,000)[3][4] can be searched by science-related categories, science-related keywords, and years of birth and death.[5]

They created a new unit, the darwin (D), defined as "the average annual frequency that 'Charles Darwin' appears in English-language books from the year he turned 30 years old (1839) until 2000". A scientist whose name appears more often than Darwin's would have a fame greater than 1 darwin. However, since few people are mentioned as often as Darwin, the millidarwin (mD; a thousandth of a darwin) is used instead. As it turns out, only three people beat Darwin by this metric: John Dewey (1752.7 mD), Bertrand Russell (1500.1 mD), and Sigmund Freud (1292.9 mD). Other famous figures, such as Albert Einstein (878.2 mD), Marie Curie (188.6 mD), and Louis Pasteur (237.5 mD), rank lower.[6] This is not a measure of the impact of their scientific work, but rather of how often they are mentioned in all types of books. For example, a scientist with a moderate scientific impact could still be famous for political involvement, or even for a negative scientific impact, such as involvement in scientific fraud or high-profile pseudoscience.
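The darwin is a ratio of two averages. The sketch below assumes, as the use of millidarwins suggests but this article does not spell out, that every scientist is scored the same way as Darwin: the mean yearly frequency of the name from the year the person turned 30 through 2000, divided by Darwin's mean over 1839 to 2000, times 1000. The input format (a hypothetical mapping from year to relative frequency, with missing years counted as zero) and the function names are illustrative assumptions, and the test frequencies are toy values, not Google Books data.

```python
from statistics import mean
from typing import Dict


def average_annual_frequency(freq_by_year: Dict[int, float], start_year: int,
                             end_year: int = 2000) -> float:
    """Mean yearly frequency over start_year..end_year inclusive.
    Years absent from freq_by_year are treated as zero (an assumption)."""
    return mean(freq_by_year.get(year, 0.0) for year in range(start_year, end_year + 1))


def fame_in_millidarwins(freq_by_year: Dict[int, float], birth_year: int,
                         darwin_freq_by_year: Dict[int, float],
                         darwin_birth_year: int = 1809, end_year: int = 2000) -> float:
    """Hypothetical fame score in mD: the person's average frequency from the
    year they turned 30, divided by Darwin's average from 1839, times 1000."""
    darwin = average_annual_frequency(darwin_freq_by_year, darwin_birth_year + 30, end_year)
    person = average_annual_frequency(freq_by_year, birth_year + 30, end_year)
    return 1000 * person / darwin


# Toy example: a name mentioned half as often as Darwin's scores about 500 mD.
darwin_toy = {year: 2e-6 for year in range(1839, 2001)}
person_toy = {year: 1e-6 for year in range(1900, 2001)}
print(fame_in_millidarwins(person_toy, 1870, darwin_toy))
```

By construction, Darwin himself scores exactly 1000 mD, i.e. 1 darwin.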

The authors warn that the current version of the Science Hall of Fame is a rough draft subject to further refinement; not all fields are covered equally, and some scientists were excluded for technical reasons. Further details are on the Science Hall of Fame website, especially in its FAQ section. As an aside, the authors called this an experiment in "culturomics" (the analysis of large sets of data to find cultural trends), a word the American Dialect Society named "least likely to succeed" among the new words of 2010.[7] It will be interesting to see whether the word catches on, and whether the culturomics link stays red or turns blue.

Fame, article quality, and other trends on Wikipedia

Using the measure of fame established by Bohannon and Veres, Snottywong created a comparison of Wikipedia with the SHoF (WP:SHOF), at the suggestion of this article's writer. The comparison lists scientists along with their fame in mD and their years of birth and death as reported by Science, as well as their years of birth and death as reported by Wikipedia and their assessment ratings (taken from the {{WikiProject Biography}} banner). Since the SHoF is still a rough draft, a highly rigorous analysis of its findings would be pointless at this stage; however, some things are worth noting.

- Numbers

First, some numbers. As of writing ...

- ... the SHoF contains 5,631 entries

- ... of these, 1,783 are living, 3,848 are dead

- ... the breakdown of these articles is 14 FA-class, 13 GA-class, 238 B-class, 246 C-class, 2,011 Start-class, and 2,479 Stub-class; 630 articles are unassessed

- ... Wikipedia is missing only two articles: Herbert Mayer, which was deleted on CSD A7/BLP grounds on 9 January 2011, and Jacob Jaffe, which was deleted through AfD on 9 December 2010 for lack of notability

- ... the Science compilation missed 42 deaths (34 of which occurred in 2010 and 2011, possibly after the data was compiled)

- ... Wikipedia articles are missing nine births, but no deaths

- ... there are 18 discrepancies in years of birth or death, other than missing dates; in each case, the first year is from Science, the second from Wikipedia

- Jim Fowler b. 1930 vs 1932

- Leon Kamin b. 1924 vs 1927

- Alexander Rich b. 1920 vs 1924

- Julius H. Taylor b. 1918 vs 1914

- David Sidney Feingold b. 1914 vs 1922

- Henry Kolm b. 1920 vs 1924

- John Beddington b. 1946 vs 1945

- Carolyn S. Gordon b. 1940 vs 1950

- Haidar Abbas Rizvi b. 1969 vs 1967

- Ishtiaq Hussain Qureshi d. 1981 vs 1988

- Lester R. Ford d. 1975 vs 1967

- Carl Chun d. 1914 vs 1938

- Loránd Eötvös d. 1947 vs 1919

- Michio Suzuki d. 1999 vs 1998

- Hu Ning d. 1916 vs 1997

- Emil Petrovici d. 1958 vs 1968

- Michael Grzimek b. 1909 vs 1934, d. 1987 vs 1959
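Tallies like the ones above come from comparing the two sources field by field. A minimal sketch, assuming both sources have been loaded into hypothetical mappings from a scientist's name to a birth year and a death year (None when no date is given, e.g. for living people). Dates "missing in Science" correspond to the deaths Science missed, and each discrepancy is reported with the Science year first, as in the list above. The names and data layout are illustrative, not those of the actual WP:SHOF tooling; "Scientist X" is a made-up entry.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical record format: {"birth": 1909, "death": 1987}; None = no date given.
Dates = Dict[str, Optional[int]]


def compare_dates(science: Dict[str, Dates], wikipedia: Dict[str, Dates]
                  ) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str]],
                             List[Tuple[str, str, int, int]]]:
    """Compare birth and death years for people present in both sources."""
    missing_in_science = []    # Wikipedia has a year, Science does not
    missing_in_wikipedia = []  # Science has a year, Wikipedia does not
    discrepancies = []         # both have a year, but the years differ
    for name in sorted(science.keys() & wikipedia.keys()):
        for field in ("birth", "death"):
            s = science[name].get(field)
            w = wikipedia[name].get(field)
            if s is None and w is not None:
                missing_in_science.append((name, field))
            elif w is None and s is not None:
                missing_in_wikipedia.append((name, field))
            elif s is not None and s != w:
                discrepancies.append((name, field, s, w))
    return missing_in_science, missing_in_wikipedia, discrepancies


science = {"Michael Grzimek": {"birth": 1909, "death": 1987},
           "Scientist X": {"birth": 1950, "death": None}}
wikipedia = {"Michael Grzimek": {"birth": 1934, "death": 1959},
             "Scientist X": {"birth": 1950, "death": 2010}}
print(compare_dates(science, wikipedia))
```

Keeping the three outcomes separate mirrors the distinction drawn above between missing dates and genuine disagreements.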

The high degree of "completion" or "accuracy" should not be taken as a sign that Wikipedia is "complete" or "accurate", because the authors used Wikipedia to determine whether people were scientists and possibly took dates from Wikipedia articles. People who lack a Wikipedia entry would presumably be excluded on "technical grounds". It is also possible that the discrepancies merely reflect changes made to Wikipedia (vandalism, mistakes, and corrections) since the data was acquired.

- Fame and quality

How, then, are article quality and fame related? Number crunching by The Signpost revealed the following.

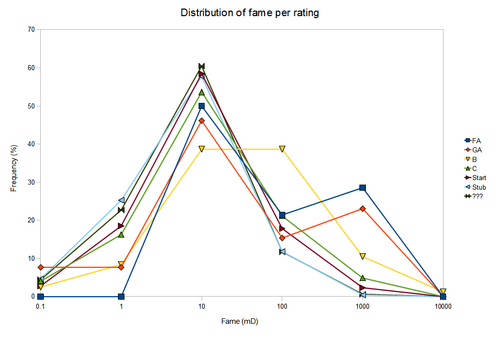

We can see that as the quality of articles increases, so does the mean fame of the scientists within the assessment class. Another way to look at this is through the distribution of fame within assessment classes: as quality increases, the distribution of fame shifts towards higher fame, that is, higher-quality articles tend to be about more famous people. While fame correlates with quality to some extent, it is no guarantee that a famous person will have a high-quality article on Wikipedia (or vice versa). There is not much else to say about these rather unsurprising results, except perhaps that the distribution of unassessed articles most closely matches that of stubs.
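The per-class figures described above amount to grouping scientists by assessment class and summarizing their fame. A minimal sketch, assuming the WP:SHOF comparison has been read into hypothetical (assessment class, fame in mD) pairs; the function name and input format are illustrative, and the test values are toy numbers rather than actual SHoF data.

```python
from collections import defaultdict
from statistics import mean, median

# Assessment classes from highest to lowest quality, as in the breakdown above.
QUALITY_ORDER = ["FA", "GA", "B", "C", "Start", "Stub", "Unassessed"]


def fame_by_class(rows):
    """rows: iterable of (assessment_class, fame_in_mD) pairs.
    Returns {class: (count, mean fame, median fame)} in quality order;
    classes not listed in QUALITY_ORDER are ignored."""
    groups = defaultdict(list)
    for cls, fame in rows:
        groups[cls].append(fame)
    return {
        cls: (len(groups[cls]), mean(groups[cls]), median(groups[cls]))
        for cls in QUALITY_ORDER
        if cls in groups
    }


toy_rows = [("B", 100.0), ("B", 300.0), ("Stub", 10.0), ("Stub", 20.0), ("Stub", 30.0)]
for cls, (count, avg, mid) in fame_by_class(toy_rows).items():
    print(cls, count, avg, mid)
```

The median is reported alongside the mean because a handful of very famous names can pull a class's mean well above its typical member.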

- Gender and quality

We can also look for trends by gender. The rankings of the top 10 men and top 10 women look like this.

Unsurprisingly, the top 10 men have higher fame than the top 10 women. At first glance, there seems to be an enormous disparity between the quality of articles on men (1 FA, 1 GA, 5 B, 1 C, 2 Start, 0 Stub) and those on women (0 FA, 0 GA, 1 B, 0 C, 6 Start, 3 Stub). However, that conclusion is not strongly supported at the moment. Since quality and fame are somewhat correlated, it would be natural for the top 10 women (who are, on average, less famous) to have articles of somewhat lower quality, although the discrepancy here seems too large to be explained by lower fame alone. Instead, the best indicator of quality seems to be a combination of fame and field. Many of the men in the top 10 come from the hard sciences and philosophy, while most of the women come from the humanities (especially feminism and psychology/psychiatry). Indeed, the two lower-rated (Start-class) articles on men concern psychologists (Havelock Ellis and G. Stanley Hall), and the only article on a woman rated above Start-class is about a physicist (Marie Curie).

Based on this, a more sensible conclusion would be that famous people from the humanities are under-represented on Wikipedia compared to other fields. However, even that conclusion should not be embraced blindly. After all, it relies on a very small sample (10 men, 10 women). Anyone interested in doing a rigorous analysis of the data would have to weight rating scores according to fame and year of birth, classify people according to the fields for which they are famous, and make sure the ratings are up to date. For example, the articles on Anna Freud and Melanie Klein could arguably be rated C-class instead of their current Start-class, and Karen Horney is rated B-class by WikiProject Psychology, but her WikiProject Biography rating has not yet been updated and is still Start-class. Lastly, there may be a bias in the Google Books selection. Books from certain fields could be digitized more often than books from other fields, or the writing conventions of a field could make full names more prevalent than in other fields, boosting the "measured fame" of its scientists relative to their "actual fame".
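One simple way to account for fame when comparing two groups is stratification: bin scientists by fame, compare mean quality scores only within bins where both groups are present, then average those within-bin gaps. The sketch below is a hypothetical illustration, not the method used by The Signpost or by Science. In particular, the numeric scale for assessment classes (Stub = 1 up to FA = 6) and the log-scale fame bins are assumptions, and each bin counts equally (weighting bins by size would be an equally defensible choice). Year of birth or field could be added to the bin key in the same way.

```python
import math
from collections import defaultdict
from statistics import mean

# Hypothetical numeric scale for assessment classes (an assumption, not an official scale).
SCORE = {"Stub": 1, "Start": 2, "C": 3, "B": 4, "GA": 5, "FA": 6}


def fame_bin(fame_md):
    """Bin fame on a log10 scale: 10-99 mD -> 1, 100-999 mD -> 2, and so on."""
    return math.floor(math.log10(fame_md))


def stratified_gap(rows, group_a, group_b):
    """rows: iterable of (group, assessment_class, fame_in_mD).
    Returns the average, over fame bins containing both groups, of
    (mean score of group_a) - (mean score of group_b), or None if no bin overlaps.
    Unassessed articles and non-positive fame values are skipped."""
    cells = defaultdict(list)
    for group, cls, fame in rows:
        if cls in SCORE and fame > 0:
            cells[(fame_bin(fame), group)].append(SCORE[cls])
    bins = sorted({b for b, _ in cells})
    gaps = [mean(cells[(b, group_a)]) - mean(cells[(b, group_b)])
            for b in bins
            if (b, group_a) in cells and (b, group_b) in cells]
    return mean(gaps) if gaps else None


toy_rows = [("men", "B", 150.0), ("women", "Start", 250.0),
            ("men", "FA", 1500.0), ("women", "B", 1200.0),
            ("women", "Stub", 50.0)]
print(stratified_gap(toy_rows, "men", "women"))
```

In the toy data, the woman in the 10 to 99 mD bin has no male counterpart, so that bin is left out of the comparison rather than counted against either group.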

Closing remarks

The above analysis only hints at the type of questions that can be asked and answered using the SHoF data. It will be interesting to see whether concerted efforts are made to improve coverage of the fields that are lacking, and whether the gender gap truly exists or is the result of a coverage bias among fields. It will be equally interesting to see whether culturomics takes off as a field, and what direction it takes.

Got comments or ideas of your own? Share them below!

References

- ^ J. Bohannon (2011). "The Science Hall of Fame". Science. 331 (6014): 143. doi:10.1126/science.331.6014.143-c.

- ^ J.-B. Michel; et al. (2010). "Quantitative analysis of culture using millions of digitized books". Science. 331 (6014): 176. doi:10.1126/science.1199644. PMID 21163965.

- ^ WP 1.0 bot (23 January 2011). "Wikipedia:Version 1.0 Editorial Team/Biography articles by quality statistics". Retrieved 2011-01-27.

- ^ J. Bohannon, A. Veres. "FAQ: How are the members of the Hall of Fame chosen?". The Science Hall of Fame. Retrieved 2011-01-27.

- ^ J. Bohannon, A. Veres. "FAQ: How are the members of the Hall of Fame chosen?". The Science Hall of Fame. Retrieved 2011-01-27.

- ^ Snottywong, Headbomb (25 January 2011). "Science Hall of Fame". WikiProject Biography. Retrieved 2011-01-27.

- ^ ""App" 2010 Word of the Year, as voted by American Dialect Society" (PDF) (Press release). American Dialect Society. 7 January 2011. Retrieved 2011-01-27.

Discuss this story

What rankings are Herbert Mayer and Jacob Jaffe on the list? Perhaps we should consider recreating those articles. NW (Talk) 01:47, 1 February 2011 (UTC)